In this case study, I’m going to show you how, over the course of 5 months, we grew the ad revenue on a content website by 373% (from $669 to $3,162).

It would be an excellent result in any case, but we also had to overcome a major obstacle (site-killer level!) when we took on this project.

The website was in massive decline, and all signs pointed to some sort of algorithmic penalty.

The game was on!


The Client and Looming Disaster

This client had just purchased a content website in a broad Home & Garden niche. The deal went through and escrow released the funds to the previous owner on November 20th.

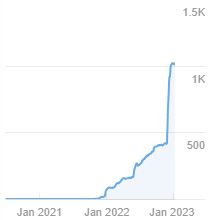

It may have been just a coincidence, but literally two days after the purchase, the website started getting A LOT of backlinks from random sources. Just have a look at this Ahrefs link graph:

As you can see, the site was already on a slippery slope, but very shortly after the influx of backlinks, it started losing traffic even faster!

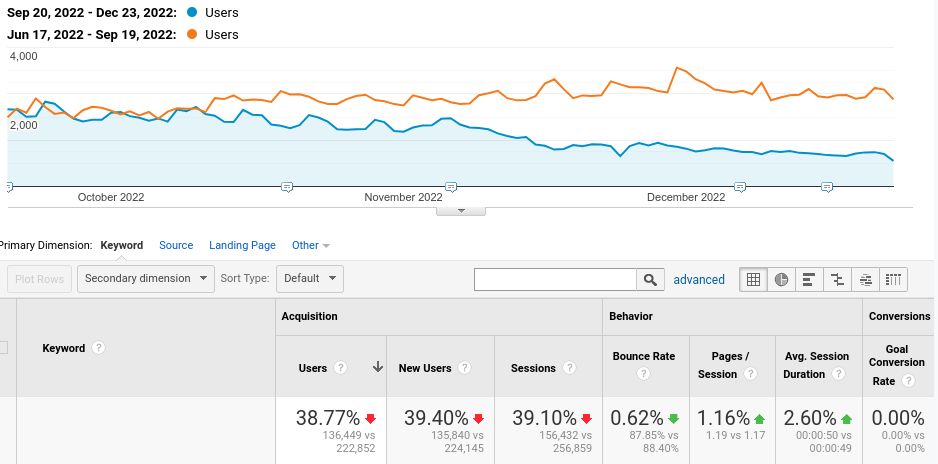

But don’t just trust Ahrefs and its estimates! Here’s a comparison from Google Analytics:

The earnings plummeted from $2,000 USD a month in June-September to $893 in December and then down to just $669 in January.

Anyone would’ve freaked out if a website they had just bought for a high five-figure sum lost two-thirds of its revenue in a matter of months! (Check our website valuation tool to find out how this affected the site’s value.)

The situation was looking dire.

Project Beginnings and The Team

The client hired us around mid-December, and, of course, we got to work right away. I’ll walk through our process, the actions we took, and the implementation below, but first, let me introduce the team.

B.O.B. meets Rad Paluszak – Technical SEO Artist

I’ve collaborated with Rad Paluszak on SEO for several projects now, and we’ve been friends for a while. He’s an expert in SEO, but his true secret weapon is his web dev and software engineering background.

Boy, did he put those programming skills to work here! Keep reading to see how!

Back then (December 2022), I was also prepping to launch B.O.B. SEO with support from Rad and his team. This challenging project with a tanking site was the perfect opportunity to battle-test our processes.

Good news – B.O.B. SEO Service Is NOW LIVE and ready to help you too!

For clarity, I am writing most of this case study, but the whole team delivered these results – kudos to everyone involved. Since Rad cracked the code on key parts (especially the WordPress API and translation), I invited him to explain those sections himself.

Rad has also been a guest on my podcast, so make sure to watch those episodes later!

- Ep 199: Backlink Due Diligence & Tech SEO

- Ep 220: How To Make AI Content Truly Valuable

- Ep 222: SEO Strategy For Flipping Websites

Now let me reveal our strategy for this campaign!

Strategy, Action & Implementation

I’ll dig into all the steps we took, but first, here’s a quick list of our action plan – a breakdown of what you’ll learn today:

- SEO Audit and Strategy:

- Technical analysis and implementation

- Google Search Console audit

- Keyword Research (KWR)

- Link Profile analysis and Link Requirement Plan (LRP)

- Content Plan

- Penalty Analysis

- Analysis of a potential negative SEO attack

- Testing different approaches

- Planning for the site’s growth

- Improving the monetization

Get ready because we’re about to get into the nitty-gritty details!

SEO Process

Every SEO pro chants the mantra that each campaign is totally unique. And it’s true!

But even though every website needs a custom strategy, there are some standard steps. You gotta nail down the basics before moving forward.

It’s like cooking. To whip up any dish, you start with a list of ingredients, shop for them, then prep your cooking station.

You follow the process.

Same goes for SEO. Every site has its own quirks and issues. But the process for tackling them usually looks similar.

In our SEO process, we kick things off with an audit, whether it’s a huge ecommerce site, a content site, or just a humble blog.

The audit is crucial because how can you help a website without understanding it first?

SEO Audit

I nerded out hard and wrote a monster guide on SEO auditing over in the SEO Strategy Blueprint, so check it out if you’re thirsty for more audit knowledge.

Alright, let’s dive into how we audited this particular site!

First up, you’ll need a few key tools:

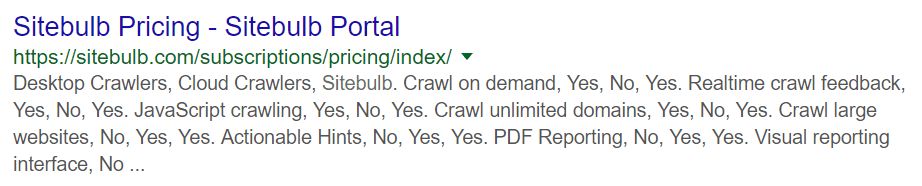

- Sitebulb for crawl analysis

- Google Search Console (GSC) for traffic insights

- PageSpeed Insights (PSI) to test site speed (although Sitebulb runs it automatically, too)

- Ahrefs to assess backlinks

With just these, you can run a killer audit.

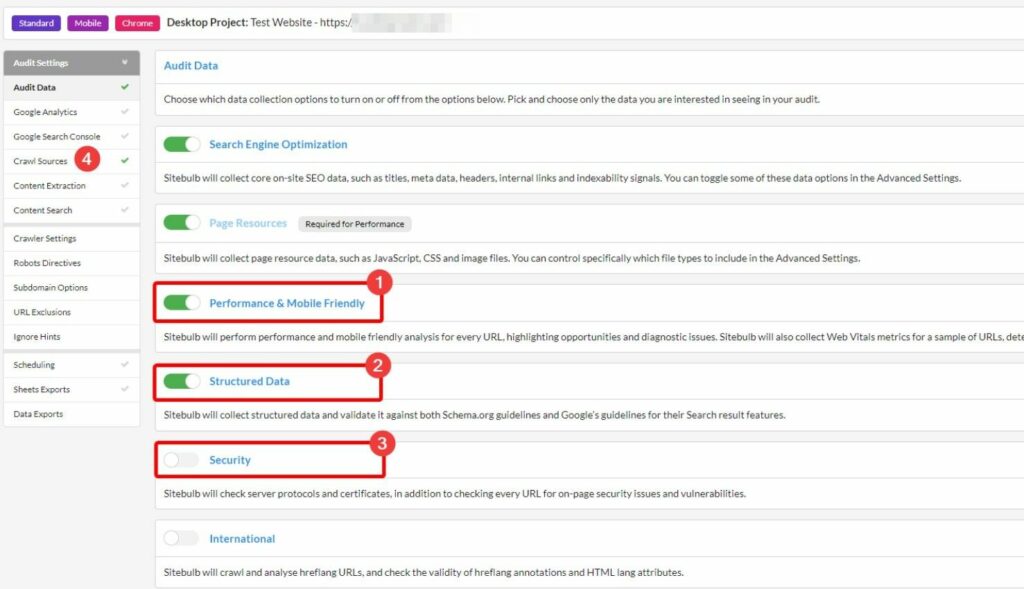

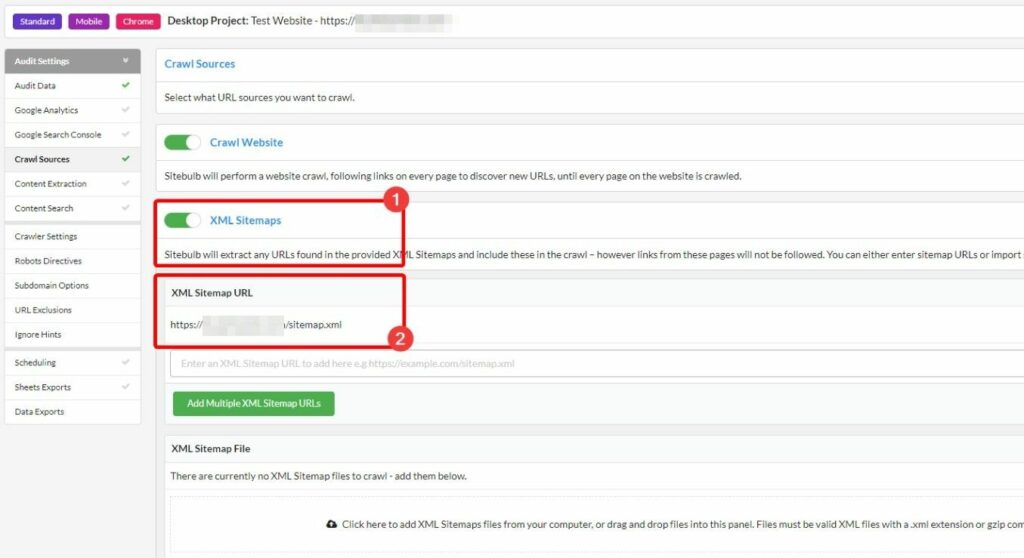

We started with a Sitebulb crawl to uncover technical issues.

The Sitebulb team has a fantastic guide on crawl settings, but here are a couple of pro tips:

- Validate structured data – Turn on these options:

- Add an XML sitemap as a URL source (4) – Go to Audit Settings > Crawl Sources and select “XML sitemaps”:

If the site has a sitemap, you’ll see its URL pop up automatically (2). If not, make a note to create one!
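If you do need to create one, most SEO plugins (Yoast and RankMath included) generate a sitemap for you. For a site outside WordPress, here’s a minimal Python sketch of the sitemaps.org format; `build_sitemap` is a hypothetical helper and the example.com URLs are placeholders.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap listing each URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" for us.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap(["https://example.com/", "https://example.com/garden-tools/"]))
```

Once the file is live, you can add its URL as a crawl source in Sitebulb and submit it in Google Search Console.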

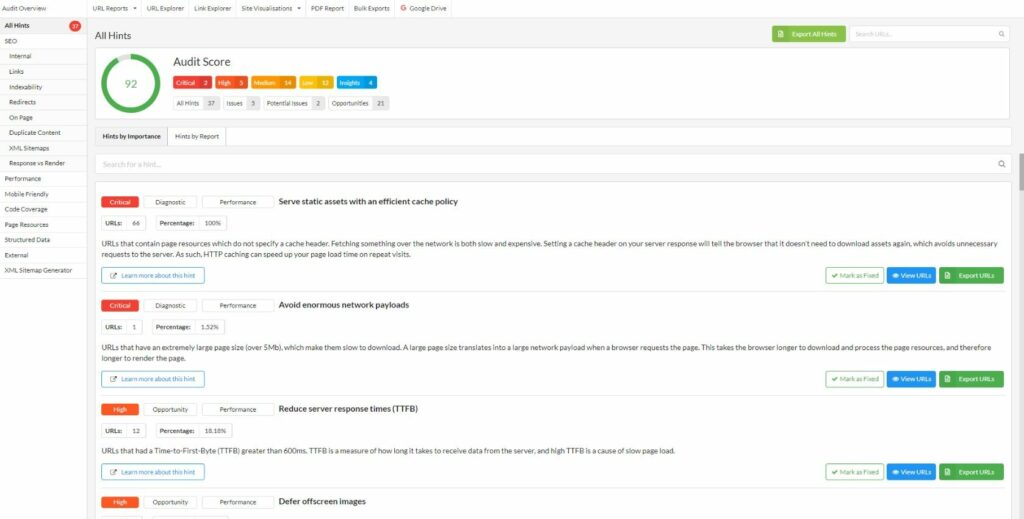

After the crawl is done, you’ll see an audit summary like this:

This gives you a short description of each issue, its severity, and the percentage of affected pages.

It goes without saying that you should prioritize issues marked as “Critical” that affect a high percentage of pages.

In our case, we tackled these issues:

Duplicate Content

Having duplicate content is a big no-no in SEO. It’s like having identical twins – hard for search engines to tell apart.

Google really dislikes duplicate content. In fact, Google itself says that around 60% of the whole internet is duplicate:

Google’s crawling process is highly focused on removing duplication because 60% of the internet is duplicate 🤯 @methode #seodaydk pic.twitter.com/OJ9OkP74DU

— Lily Ray 😏 (@lilyraynyc) March 30, 2022

Widespread duplicate content could affect the overall site quality signals, which may decrease the whole site’s organic traffic.

Even though Google can handle it with their fancy algorithms (5 steps of crawl data deduplication), as an SEO it’s your job to prevent duplication.

After crawling the site with Sitebulb, we found duplicates by checking for:

- Duplicate page titles

- Duplicate meta descriptions

- Duplicate H1s

- Duplicate main content – yes, Sitebulb helps with that, too.
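Sitebulb does this for you, but if you’re working from a raw crawl export, exact-match duplicates are easy to find yourself. A minimal Python sketch, assuming each page is a dict with hypothetical `url`, `title`, `meta_description`, and `h1` keys. It only catches exact matches (after trimming and lowercasing); near-duplicate body content needs a similarity check.

```python
from collections import defaultdict


def find_duplicates(pages, field):
    """Group page URLs by a normalized field value; keep only values used 2+ times."""
    groups = defaultdict(list)
    for page in pages:
        # Normalize case and surrounding whitespace so near-identical values match.
        value = (page.get(field) or "").strip().lower()
        if value:  # an empty value is a "missing" issue, not a duplicate
            groups[value].append(page["url"])
    return {value: urls for value, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl export rows; column names are assumptions.
pages = [
    {"url": "/lawn-care", "title": "Lawn Care Guide", "h1": "Lawn Care"},
    {"url": "/lawn-care-2", "title": "Lawn care guide ", "h1": "Lawn Care"},
    {"url": "/compost", "title": "Composting 101", "h1": "Composting"},
]
for field in ("title", "meta_description", "h1"):
    print(field, find_duplicates(pages, field))
```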

How can you fix duplication? A few options:

- Delete the duplicate and redirect its URL to the original.

- Use a canonical tag to point to the original.

- Noindex the duplicate.

Whichever you choose, don’t forget to update internal links to only go to the original – not the duplicate page URL.

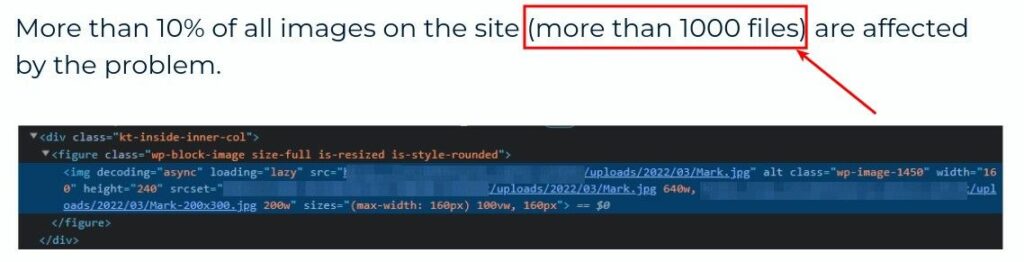

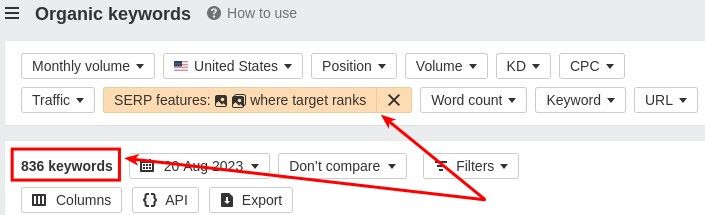

Images with missing alt text

I usually don’t sweat over this one too much.

And frankly, it annoys me when every lame SEO tool or spammy sales pitch with bad English warns about missing alt text. (You know which ones I’m talking about; they usually go something along the lines of: “Hi Sir, I founded SEO mistakes on your high quality website and I am reaching out to acquaint you to our exception service in SEO field”.)

But in SEO, it’s all about weighing these three things:

Issue Severity

vs

Implementation Difficulty

vs

Potential Benefits

So trust me, if you see something like:

It’s probably worth adding those alt tags.

It took us some time, but we eventually fixed all missing alt tags – in both the WP media library and static images in code templates.

This helped us jump from 71 image pack rankings to over 830:

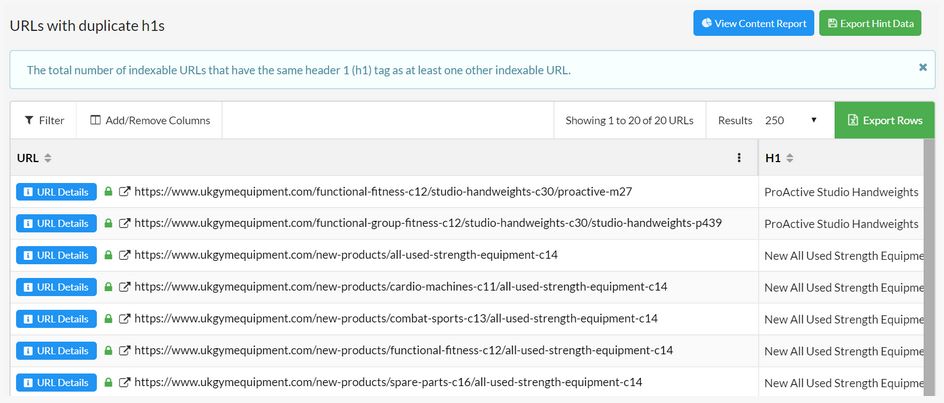
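Sitebulb flags these for you, but for static images in templates it can help to scan the HTML directly. A minimal sketch using Python’s built-in `html.parser`; `MissingAltFinder` is a hypothetical helper name. It treats an empty `alt=""` as missing too, so its results need a manual pass: empty alt text is the correct choice for purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> whose alt attribute is absent or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags also arrive here via handle_startendtag.
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # Blank alt is flagged for review; it is valid for decorative images.
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))


finder = MissingAltFinder()
finder.feed(
    '<img src="raised-bed.jpg" alt="Cedar raised garden bed">'
    '<img src="hose.jpg">'
    '<img src="divider.png" alt="" />'
)
print(finder.missing)  # ['hose.jpg', 'divider.png']
```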

Duplicate or Multiple <h1> Tags

Similar to duplicate content, keep an eye out for pages that have the same H1 tag or multiple H1s, even if the content is different.

The H1 tag helps users and search engines quickly grasp what a page is about.

If multiple pages have the same H1, search engines may struggle to determine the best page for a query. This can cause keyword cannibalization – where your own pages compete for the same terms.

In summary:

- Identical H1s = Confusing for search engines

- Can lead to own pages competing against each other

- May also signal duplicate content issues

You can easily find duplicated and multiple H1 tags in Sitebulb – here’s what it looks like in the report after the crawl:

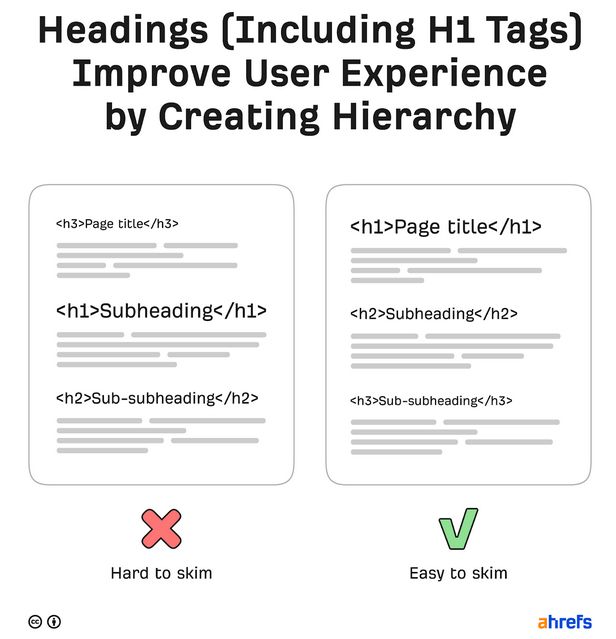

Best practice is to only have one H1 per page. Most SEOs will tell you to stick to that.
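To spot-check a single page outside Sitebulb, counting H1s is straightforward. A minimal Python sketch with the standard-library `html.parser`; `H1Collector` and `check_h1` are hypothetical names. A theme that injects an extra H1 (for example, around the site logo) will show up as “multiple”.

```python
from html.parser import HTMLParser


class H1Collector(HTMLParser):
    """Record the text of every <h1> on a page."""

    def __init__(self):
        super().__init__()
        self.h1s = []
        self._parts = None  # None means we're not inside an <h1>

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._parts = []

    def handle_data(self, data):
        if self._parts is not None:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag == "h1" and self._parts is not None:
            # Collapse whitespace so "Best <em>Tomato</em> Cages" reads cleanly.
            self.h1s.append(" ".join("".join(self._parts).split()))
            self._parts = None


def check_h1(html):
    """Return ("missing" | "ok" | "multiple", list_of_h1_texts)."""
    collector = H1Collector()
    collector.feed(html)
    collector.close()
    count = len(collector.h1s)
    status = "missing" if count == 0 else "ok" if count == 1 else "multiple"
    return status, collector.h1s


print(check_h1("<h1>Best <em>Tomato</em> Cages</h1><p>...</p><h1>Buying Guide</h1>"))
# ('multiple', ['Best Tomato Cages', 'Buying Guide'])
```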

When fixing the H1s here, we also made them keyword-rich and aligned with user intent – not just for SEO but for a better user experience.

Additionally, we made sure all post headings followed a semantic structure. This allows users to more easily skim the content.

Ahrefs has a great article explaining how proper headings improve blog post formatting and readability:

https://ahrefs.com/blog/how-to-format-a-blog-post/

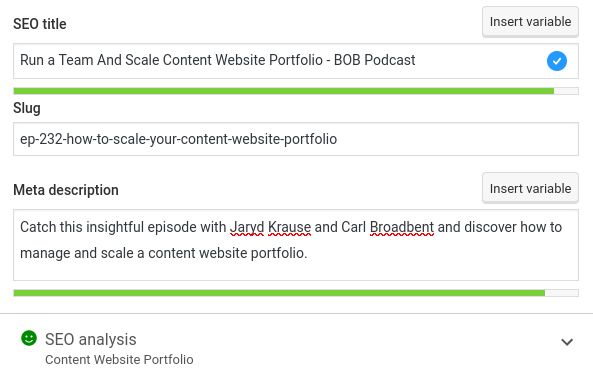

Missing meta descriptions

Search engines don’t use meta descriptions for rankings. But they’re still important for:

- Snippet Previews – Searchers see the description on Google to evaluate page quality before clicking. So meta descriptions influence click-through rate (CTR).

- Social Shares – Descriptions are shown when people share links on social media. Again, they impact CTR there.

If the meta description is missing, it’s a missed optimization opportunity. What’s worse, search engines may auto-generate a poor description instead, like this example from Sitebulb:

Our process:

- First, we found affected pages with Sitebulb.

- Second, we determined if those pages were needed in Google. If not, we noindexed them.

- Third, we wrote new descriptions focused on maximizing CTR.

- Finally, we added the new meta descriptions.

Here’s how you do it in Yoast SEO:

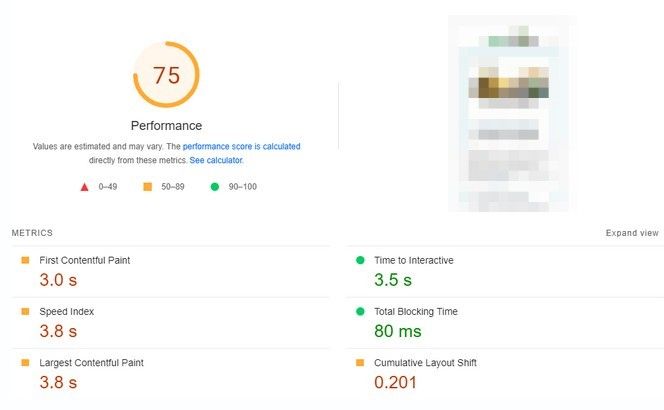

PageSpeed & Core Web Vitals

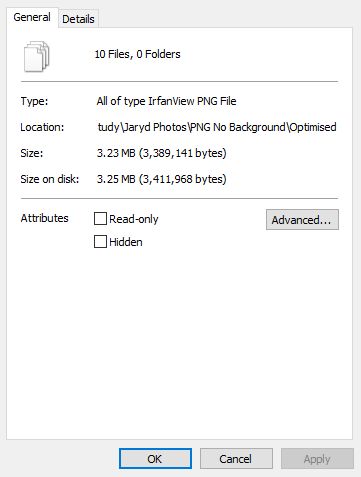

The site’s speed results were decent at first glance:

But we couldn’t settle for decent – optimization was needed!

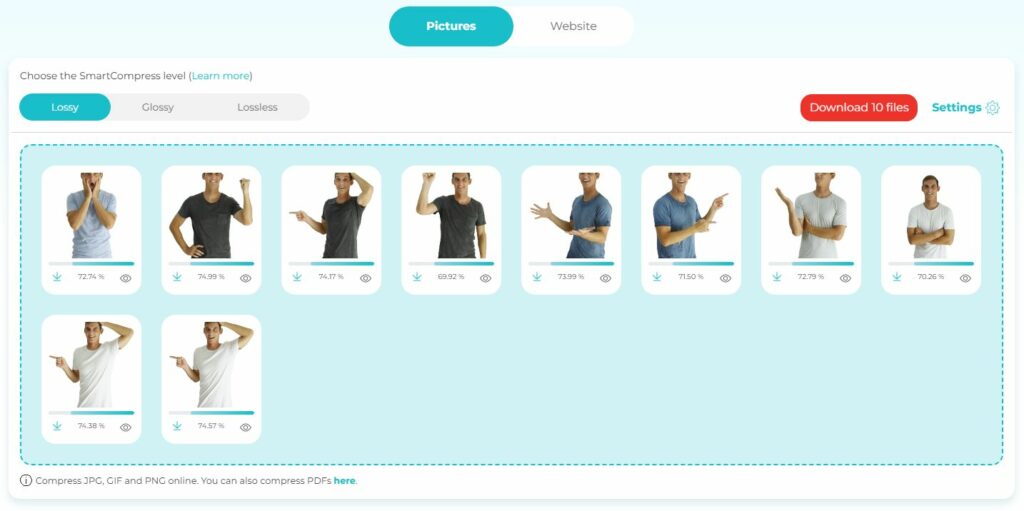

Optimizing images is my first move. It’s so quick and easy – can’t skip it! Plus, it’s pretty much automated.

I use ShortPixel to optimize images, either through their WordPress plugin or their website.

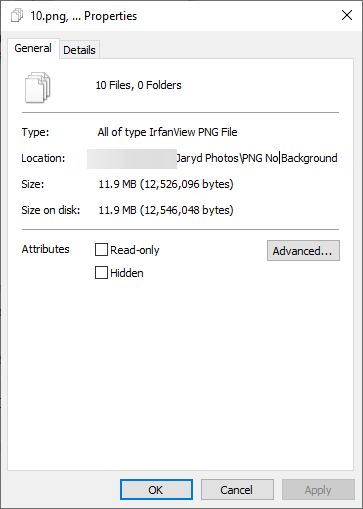

Check out ShortPixel compressing some of my photos:

Their size before: 11.9MB

And after: 3.23MB (saving 72.86%):

We also used WP Rocket to tweak it a bit more (I wrote a complete guide to it here) and – bam! – much faster:

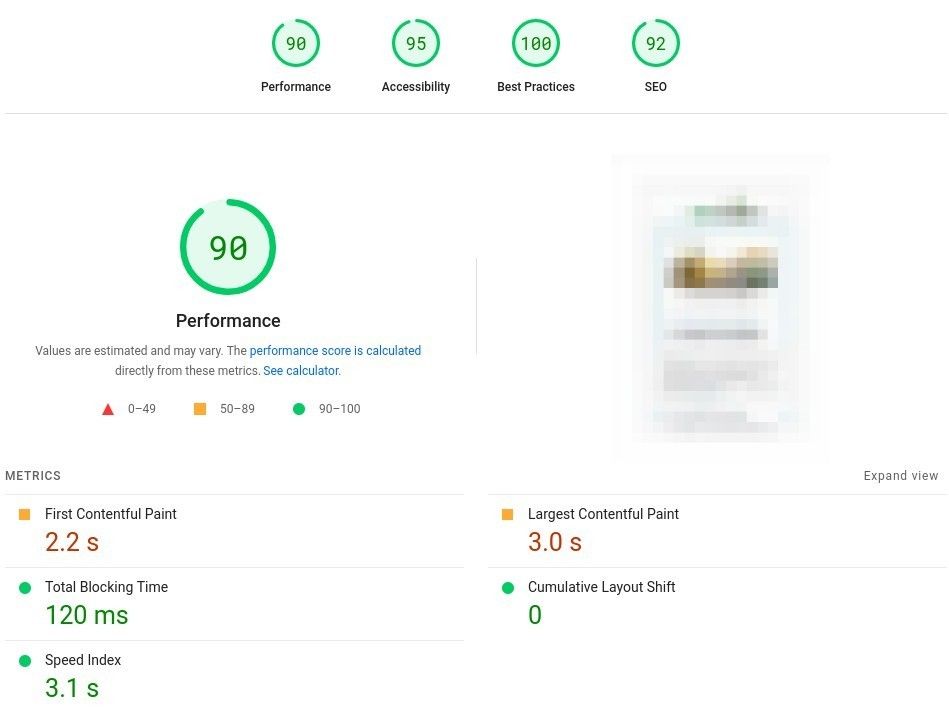

Most importantly, we now passed the Core Web Vitals assessment:

That’s exactly what you want to see!
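For reference, the assessment passes when all three Core Web Vitals are “good” at the 75th percentile of real-user data. A minimal Python sketch using Google’s published “good” thresholds; `passes_core_web_vitals` is a hypothetical helper and the input values are illustrative. When this campaign ran, the responsiveness metric was still First Input Delay (good at 100 ms or less); Interaction to Next Paint replaced it in March 2024.

```python
# "Good" thresholds, applied to 75th-percentile field data.
GOOD_THRESHOLDS = {
    "LCP_ms": 2500,  # Largest Contentful Paint
    "INP_ms": 200,   # Interaction to Next Paint (replaced FID in March 2024)
    "CLS": 0.1,      # Cumulative Layout Shift (unitless)
}


def passes_core_web_vitals(p75):
    """Return (passed, failing_metrics) for a dict of 75th-percentile values."""
    failing = [metric for metric, limit in GOOD_THRESHOLDS.items() if p75[metric] > limit]
    return not failing, failing


print(passes_core_web_vitals({"LCP_ms": 2100, "INP_ms": 180, "CLS": 0.05}))  # (True, [])
print(passes_core_web_vitals({"LCP_ms": 3200, "INP_ms": 180, "CLS": 0.12}))  # (False, ['LCP_ms', 'CLS'])
```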

Site Structure

Since this was a content site covering a wide range of topics, having a solid content structure was crucial. Information architecture (IA) and site structure work hand in hand. You want everything neatly organized so users (and Google) can find things easily.

Imagine walking into a giant, messy department store. Socks are next to blenders, toys beside perfume. You’d be confused and frustrated navigating that chaos!

That’s why stores have organized sections and signs. Just like stores need good IA, websites do, too.

In short, IA is like the blueprint for a site. It shows how content and features connect, so people can intuitively find what they want.

Here are some key parts of a website’s IA:

- Navigation – Like store signs pointing to shoes or kitchenware. Helps guide users.

- Categories – Like sections of a bookstore, e.g., Fiction or Kids. They let users narrow things down.

- Site search – Lets users jump to what they want, like asking a store clerk.

- Labels – Clear, understandable terms users search for. Not jargon.

- User paths (optional) – The journey from entering a site to making a purchase.

So if someone says they’re working on IA, they’re organizing the site to make it easy to find things – like keeping socks in the sock aisle! Makes sense, right? 😉

In this case, the site had 530 articles but only 5 categories.

Doing the math:

530 / 5 = 106

…you can see the categories were overloaded! Not great for users or Googlebot.

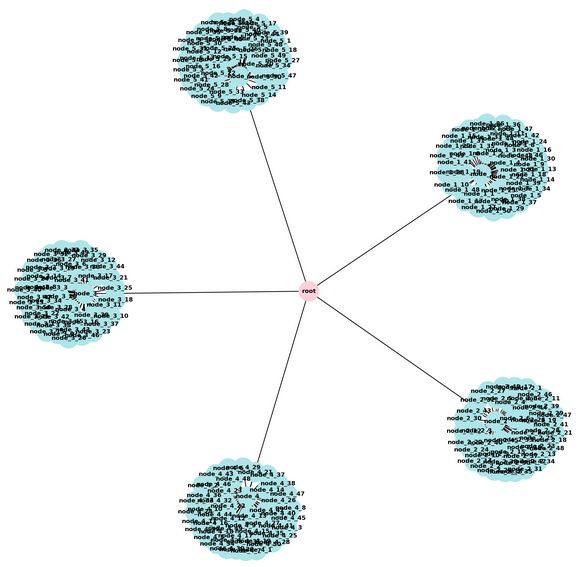

Here’s a simple visualization of the site’s structure before:

You can barely see the articles! Same issue Google had.

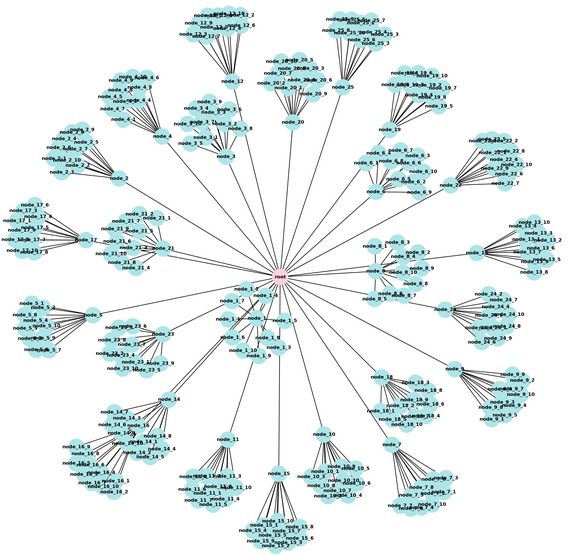

We initially planned to add 20 more categories and subcategories. Then, a few months later, we introduced 35 more.

Here’s the improved structure:

Much more organized!

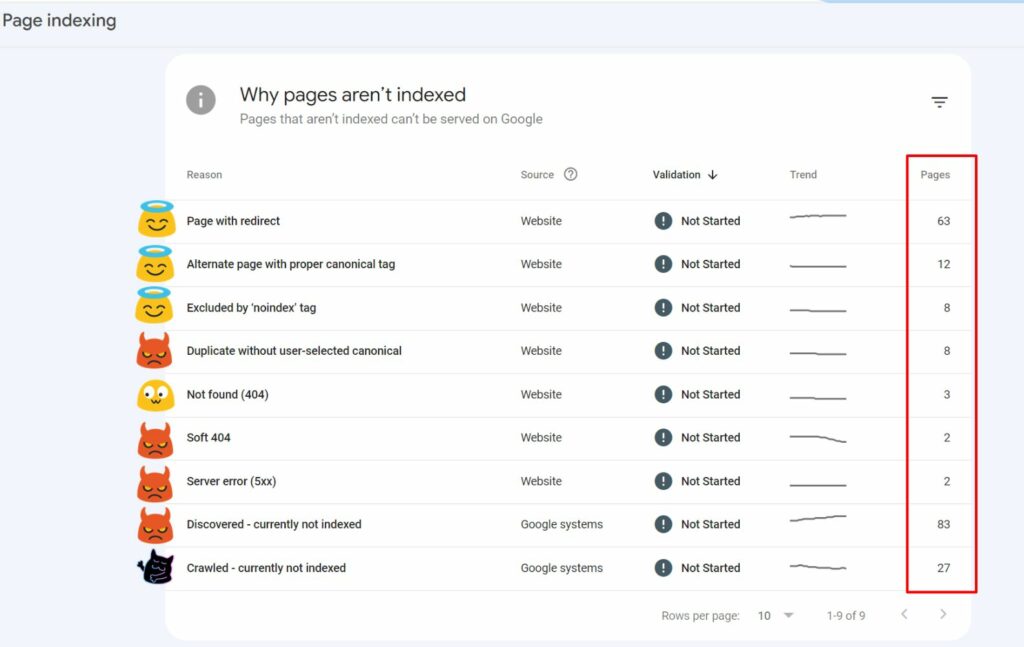

Google Search Console Audit

When auditing a site, always check Search Console – it’s a goldmine of SEO data!

Pay attention to page indexing. This shows any crawl or indexation issues and what Google knows about the site.

For example:

I added emoji to the issues to show different types:

- 😇 Info – Usually nothing to worry about and it just confirms stuff for Google. Review them, but often no big deal.

- 😳 Attention – In most cases, no action needed but review closely just in case.

- 😈 Problem – Something you should fix here. Don’t ignore!

- (dark) 👿 Big problem – Should NOT be happening. Major issue to address.

Also see the “Pages” column. Even severe issues may only affect a small number of pages.

You should focus on the big issues with a high number of affected pages.
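That rule of thumb (severity first, then affected pages) is easy to apply to an exported issue list. A minimal Python sketch; the severity labels mirror the emoji scale above, while the issue rows and page counts are purely illustrative, not this site’s data.

```python
# Severity scale mirroring the emoji labels above (higher = more urgent).
SEVERITY = {"info": 0, "attention": 1, "problem": 2, "big problem": 3}


def prioritize(issues):
    """Sort issues by severity, then by number of affected pages, both descending."""
    return sorted(
        issues,
        key=lambda issue: (SEVERITY[issue["severity"]], issue["pages"]),
        reverse=True,
    )


# Illustrative rows only; the page counts are made up.
issues = [
    {"reason": "Page with redirect", "severity": "info", "pages": 300},
    {"reason": "Not found (404)", "severity": "problem", "pages": 15},
    {"reason": "Crawled - currently not indexed", "severity": "big problem", "pages": 120},
]
for issue in prioritize(issues):
    print(issue["severity"], issue["pages"], issue["reason"])
```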

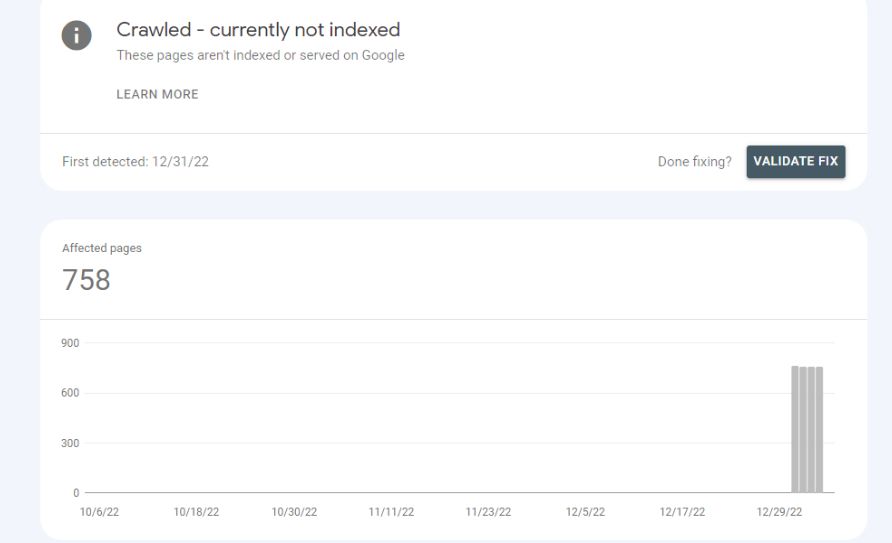

Now, in our case, the biggest problem was under the (dark) 👿 Crawled – currently not indexed category:

Our conclusion was that because there was insufficient navigation to deeper blog articles, Google wasn’t too interested in all of the content.

If you ever reach a similar conclusion, go back and study the Site Structure section again 😉

Penalty Analysis

Once the audit was done and issues fixed, we really dug into the penalty problem.

The audit and implementations were completed in month one. But since the changes still needed time to be indexed, not much had improved yet.

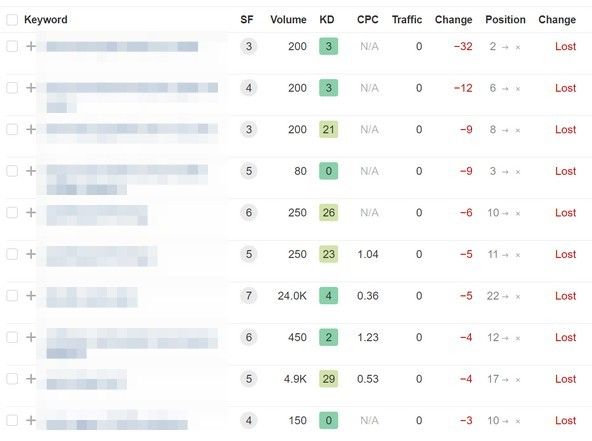

We were still losing keywords:

And traffic:

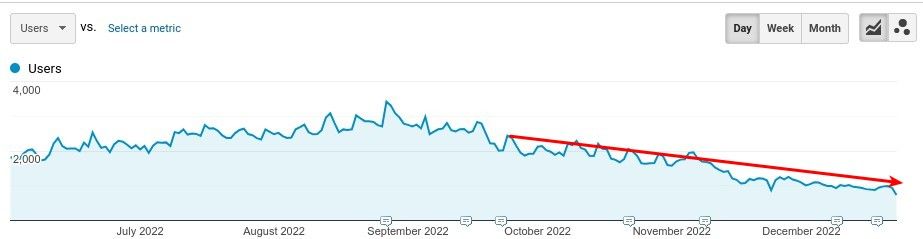

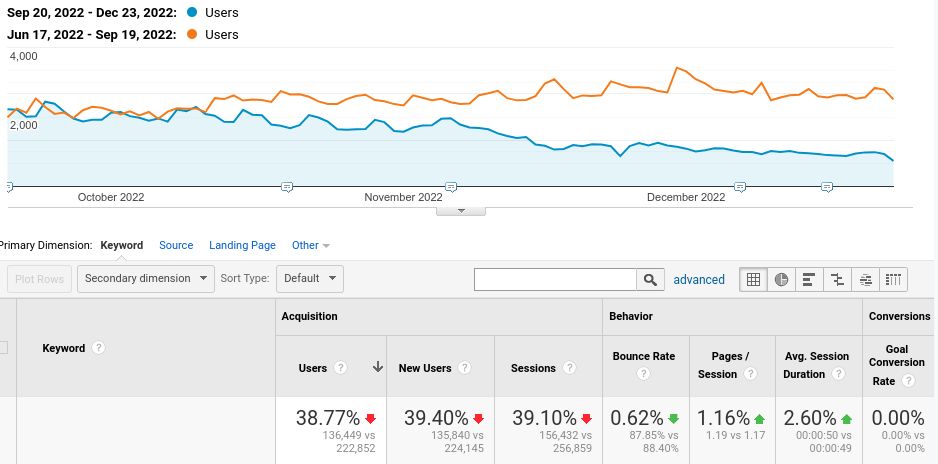

Comparing a few months before the dip to the previous period, the site had lost almost 40% of traffic:

After the auditing, we had a few theories:

- Core Algorithm updates – there were a few happening during this period of time:

- 20th September 2022 – Product Reviews

- 19th October 2022 – October Spam Update

- 5th November 2022 – Unconfirmed, but likely Core, Update

- 5th December 2022 – 2nd Helpful Content Update

- 14th December 2022 – Link Spam Update

- Negative SEO attack.

Let’s break these down.

I’ll start with something you don’t see too often: a suspected negative SEO attack.

Negative SEO Attack!?

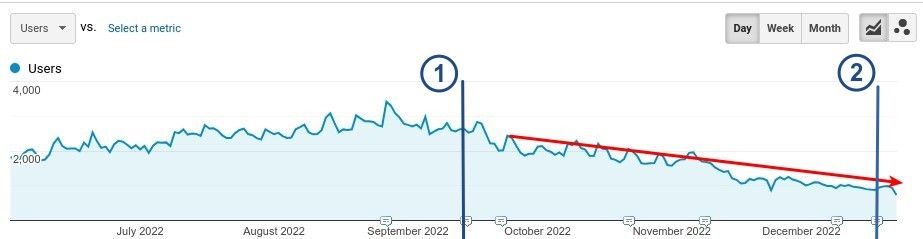

Let’s re-check the timeline of Google updates vs our traffic dips:

I highlighted two link-related updates (1) and (2). At first glance, they don’t seem connected to the drops.

But get this – in November, the site got almost 600 new referring domains out of nowhere!

That super sketchy spike didn’t look good at all 😥 This was more links at once than the site ever had.

And its domain rating was only 4.4 – super low.

Taking all that into account, if you look at those two updates again, you can see we did get hit with dips about 2 weeks after each one.

Maybe we were just seeing patterns where there weren’t any. But hey, we were dealing with a penalty here! When that’s the case, you gotta follow even the weirdest leads.

So we decided to take a closer look at those shady new links from November.

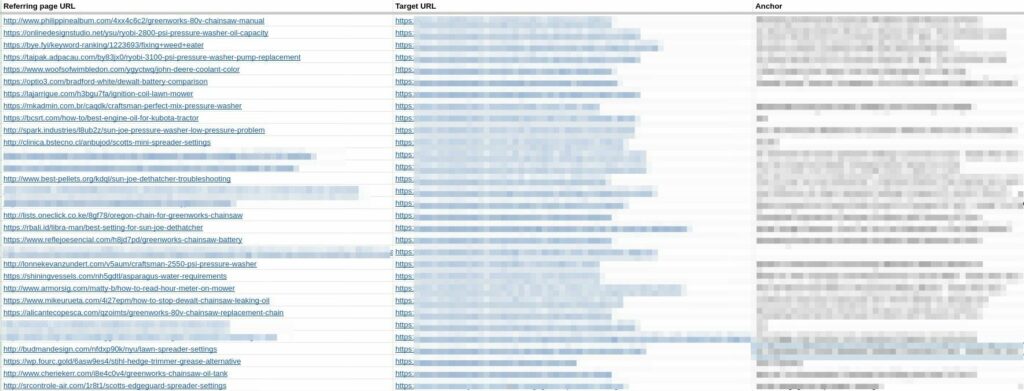

We uncovered hundreds of toxic links, like these:

Some of these are still live but don’t link to this site anymore. If the links are dead now, my bad – not gonna keep ’em updated:

- https://homesite.apartments/t8n6gt/chevron-tractor-hydraulic-fluid-equivalent

- http://largerdawn.com/pqv7px5o/scott-spreader-setting-for-lime

Many of them looked like this:

Most of them followed the same pattern:

- A random segment in the URL path (like t8n6gt or pqv7px5o in the examples above).

- Topic loosely related to our keywords.

- Total gibberish content.

- Tons of outbound links.

These were likely meant to hurt us.

Couldn’t know for sure… but we had to disavow them anyway just to be safe!

And that’s exactly what we did:
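Google’s disavow file is a plain-text list with one URL or `domain:` entry per line and `#` for comments. Here’s a minimal Python sketch of that step, assuming you disavow at the domain level (the case study doesn’t say whether we disavowed individual URLs or whole domains). `looks_like_spam_pattern` is a hypothetical heuristic based on the URL pattern described above: a random alphanumeric segment followed by a keyword slug. It only shortlists links for manual review, since the other signals (gibberish content, masses of outbound links) require looking at the pages themselves.

```python
import re
from urllib.parse import urlparse

# Heuristic: a 5-10 character lowercase segment that mixes letters and digits.
RANDOM_SEGMENT = re.compile(r"(?=[a-z0-9]*\d)(?=[a-z0-9]*[a-z])[a-z0-9]{5,10}")


def looks_like_spam_pattern(url):
    """Flag URLs shaped like /<random-segment>/<keyword-slug-with-hyphens>."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    if len(segments) < 2:
        return False
    random_part, slug = segments[-2], segments[-1]
    return bool(RANDOM_SEGMENT.fullmatch(random_part)) and slug.count("-") >= 2


def build_disavow(urls, comment="Suspected negative SEO links"):
    """Return disavow-file text with one domain: line per unique hostname."""
    hosts = sorted({urlparse(u).hostname for u in urls if urlparse(u).hostname})
    lines = [f"# {comment}"] + [f"domain:{host}" for host in hosts]
    return "\n".join(lines) + "\n"


backlinks = [
    "https://homesite.apartments/t8n6gt/chevron-tractor-hydraulic-fluid-equivalent",
    "http://largerdawn.com/pqv7px5o/scott-spreader-setting-for-lime",
    "https://example.com/blog/how-to-prune-roses",
]
flagged = [u for u in backlinks if looks_like_spam_pattern(u)]
print(build_disavow(flagged))
```

The output is ready to upload through Search Console’s disavow links tool after you’ve reviewed the shortlist.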

Testing, Testing, Testing…

Let’s have a quick look at the drops again, this time with the core algorithm updates highlighted:

Each one was hitting the site, so it was safe to assume we were up against something related to a core algorithm update.

With a core algo hit, there’s no one thing to fix. I talk about this more in the next section: Algorithmic Penalty or Devaluation.

You have to improve everything to get the site back in Google’s good graces.

So we went through multiple rounds of tweaks and tests.

Here are just a few things we tried.

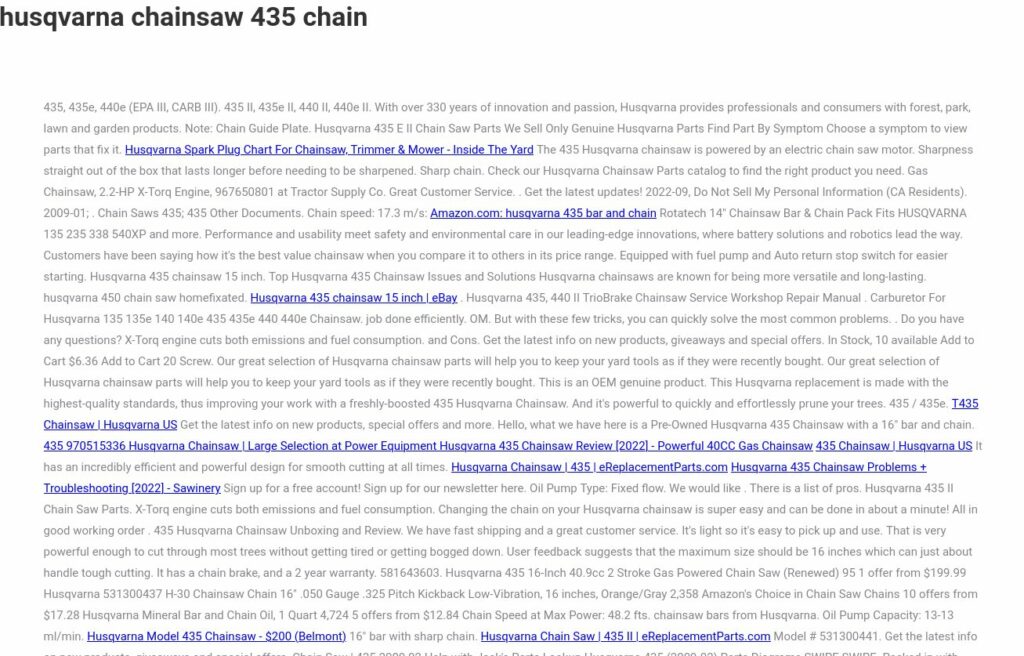

RankMath

The site had Yoast and RankMath installed but neither was active.

I personally like Yoast, but the guys at the agency swear by RankMath, so we set that up. Configuring RankMath was one of the first things we did for this client.

RankMath has a super easy initial setup wizard:

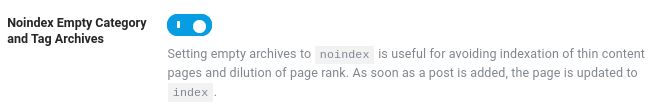

We use the Advanced options for more control, and, for example, always keep this handy setting on:

RankMath also manages your 404 hits and redirections:

All of that for FREE.

But if you need even more advanced options, like FAQ Schema builder or multiple structured data types per article, then you should buy the premium version.

Content Improvements

Many posts had bad summaries, like this:

I know, it’s terrible… So we used AI to rewrite them 🤖😘

FAQ Sections

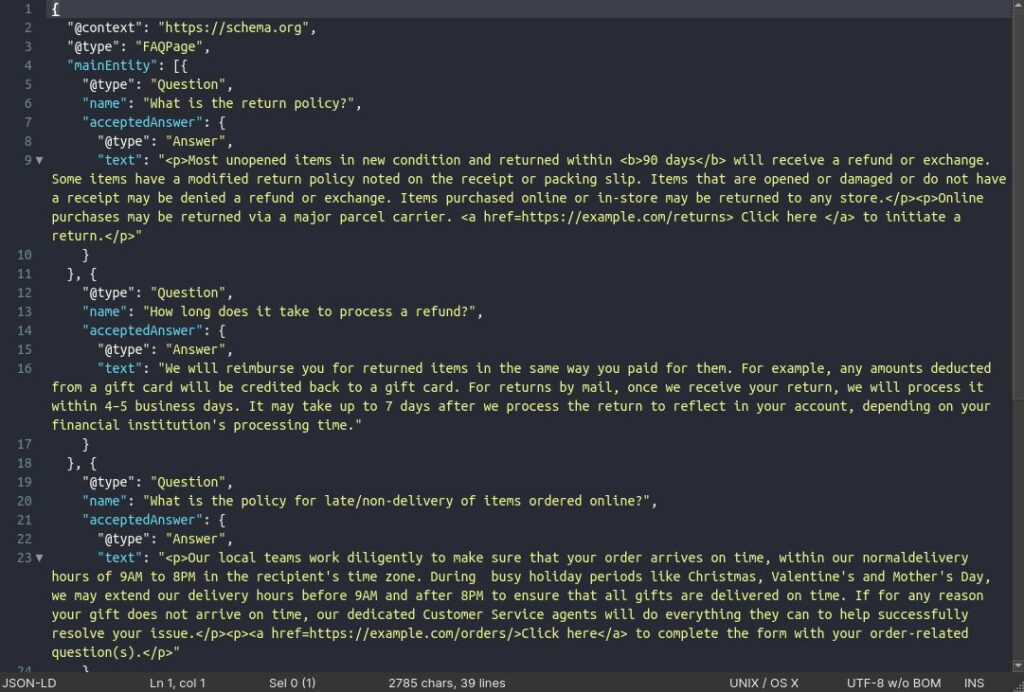

We also used AI + People Also Ask data to add FAQs.

And we obviously implemented JSON-LD structured data for that, too:
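The FAQPage markup itself is simple. A minimal Python sketch that turns question/answer pairs into a schema.org `FAQPage` JSON-LD script tag; `faq_jsonld` is a hypothetical helper, and on a WordPress site your SEO plugin (such as RankMath’s premium FAQ Schema builder) can output this for you.

```python
import json


def faq_jsonld(faqs):
    """Build a schema.org FAQPage JSON-LD <script> tag from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "</" so answer text can't close the <script> tag early ("\/" is valid JSON).
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(faq_jsonld([("What is a raised bed?", "Answer text goes here.")]))
```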

Key Takeaways

We created key takeaways for all posts too.

Many big sites, like the Daily Mail, do this now for better UX:

After several rounds, even as we kept losing keywords, traffic started inching up:

Makes sense – Google was still purging irrelevant terms or keywords where the user intent was mismatched. But rankings strengthened for other, better-fitting ones.

Algorithmic Penalty or Devaluation

Well, which one is it? Penalty or devaluation? That is the question!

Back in the day, if you screwed up, Google slapped you with a manual penalty. It was pretty black and white.

Nowadays though, when your site gets hit by an algo change, calling it a “penalty” doesn’t really make sense.

Why’s that?

Well, you didn’t get penalized – you just got what your site deserved based on the overall quality.

See, for a broad core update to impact you, the algorithm itself shifted. Maybe the neural net weights changed, or the training data got tweaked. The AI just got smarter (or … different).

It’s not any one thing you did or didn’t do. It’s EVERYTHING, the whole picture.

Think of it like Google moving the whole playing field. If you’re lucky, you’ll benefit. 👍👍

If you’re also lucky, it won’t touch you at all. 👍

And if you’re unlucky, it’ll make you bleed. 🩸👎

There’s no quick fix. The only fix is sticking to Google’s guidelines and constantly improving your site. The overall quality of it.

So since there’s no fix, it’s not really a penalty anymore.

I’m using “penalty” in this case study because it’s still a more popular term for this. And has more search volume, too 🤣

But truth be told, we don’t call them “algo penalties” internally anymore – we say “algorithmic devaluations.”

The difference might be subtle, but we’re picky with wording!

The Growth Plan

While you’re working on a recovery, don’t lose sight of other goals like long-term growth.

While not directly related to getting out of the penalty box, growth ties into overall site quality.

We performed an array of analyses and strategy sessions so the site could not only recover but keep climbing steadily afterward.

Mostly just solid SEO groundwork – it’s a simple field when done right!

Keyword Research (KWR)

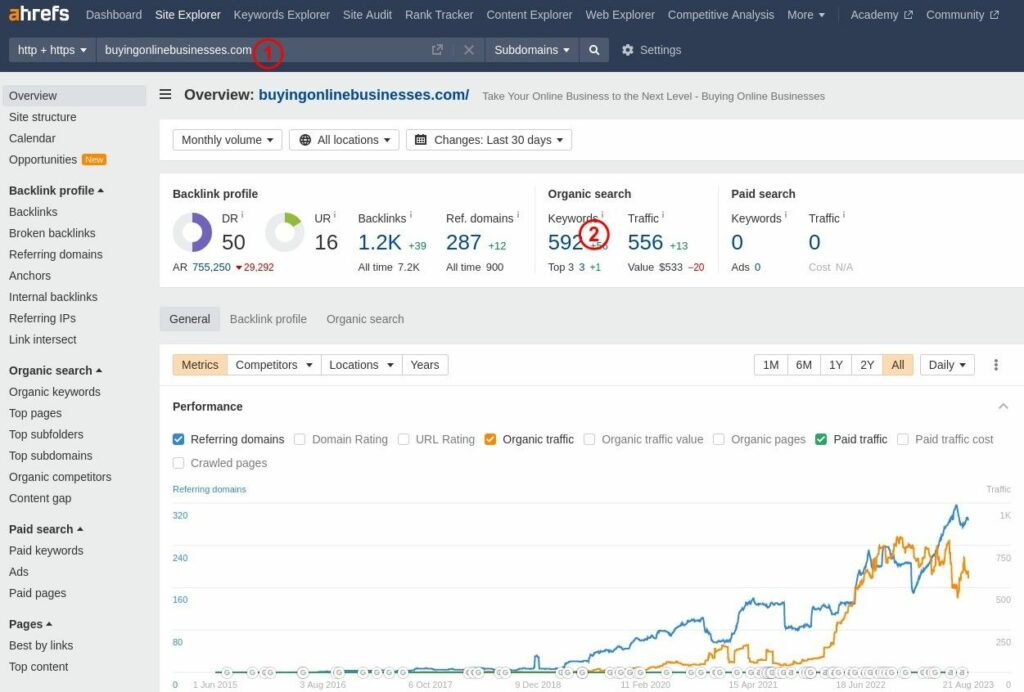

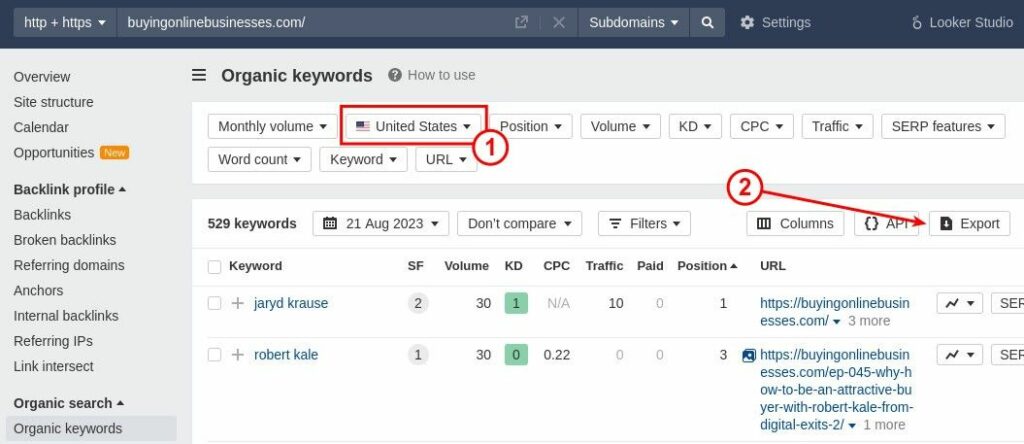

We use Ahrefs for keyword research.

You just need to get an export of your current keyword rankings, and you can start crafting a plan.

Go to Ahrefs, enter your URL (1), and click the # of keywords (2):

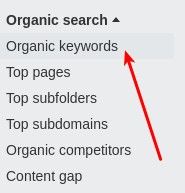

Alternatively, you can select “Organic keywords” under “Organic Search” section in the left menu:

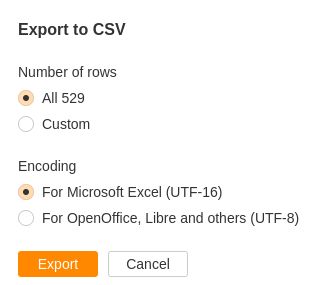

Once there, make sure you’re looking at the correct location (1) and click the Export button (2):

Then, you’ll need all the keywords exported, so you can use them in Excel or Google Sheets:

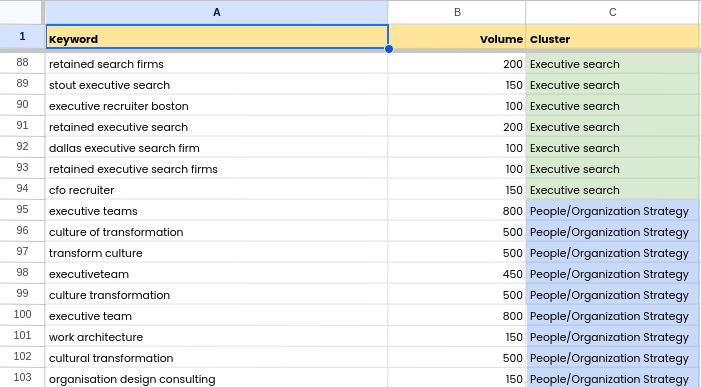

Based on this data dump, we create a spreadsheet with the following tabs:

- Current Visibility – this is all your keywords. As much as we like all the data Ahrefs gives us, you only need these columns:

  - Keyword

  - Volume

  - KD

  - Current position

  - Current URL

- Low-hanging keywords – this is what you can target with small optimizations or link building. Just extract the keywords currently ranking in positions 11-20.

- New Opportunities – fresh keyword ideas.
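Pulling out the low-hanging keywords is a simple filter on the export. A minimal Python sketch, assuming a CSV with the column names listed above (Ahrefs’ actual export headers and file encoding may differ, so adjust them to match your file); `low_hanging` is a hypothetical helper that sorts the results by volume.

```python
import csv
import io


def low_hanging(rows, best=11, worst=20):
    """Keep keywords ranking between `best` and `worst`, highest volume first."""
    picks = []
    for row in rows:
        position = (row.get("Current position") or "").strip()
        if position.isdigit() and best <= int(position) <= worst:
            picks.append(row)
    return sorted(picks, key=lambda r: int(r["Volume"] or 0), reverse=True)


# Illustrative export; in practice use open("export.csv", newline="", encoding="utf-8").
sample = """Keyword,Volume,KD,Current position,Current URL
raised bed soil mix,900,12,14,https://example.com/soil
best garden hose,5400,35,3,https://example.com/hose
how to compost leaves,1200,8,18,https://example.com/compost
"""
rows = list(csv.DictReader(io.StringIO(sample)))
for row in low_hanging(rows):
    print(row["Keyword"], row["Current position"], row["Volume"])
```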

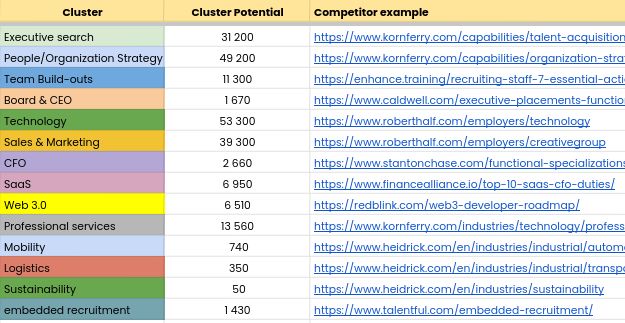

We usually cluster new keyword ideas like this:

And estimate potential traffic per cluster:

It helps us prioritize the best clusters to focus on.

Not every keyword research project targets multiple clusters. When targeting a single cluster, calculate how many keywords you need ranking to make that cluster profitable.

We create a table similar to this one:

For example, say you estimate that you can get 10% of 350 target keywords (35 keywords) into the top 10. Assuming a 3% CTR, that cluster could drive around 50k new visitors. Use estimates like that to set realistic goals!
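The estimate boils down to keywords ranking × search volume × CTR. A minimal Python sketch of that arithmetic; `estimate_cluster_traffic` is a hypothetical helper that assumes the keywords reaching the top 10 have the cluster’s average volume and uses a single flat CTR (in reality, CTR varies a lot by position). The volumes behind the 50k figure aren’t given here, so the inputs below are placeholders.

```python
def estimate_cluster_traffic(volumes, share_in_top10, ctr):
    """Estimated visits from a keyword cluster, in the same period as `volumes`.

    volumes: monthly search volume for each target keyword
    share_in_top10: fraction of keywords expected to reach the top 10 (e.g. 0.10)
    ctr: assumed average click-through rate for those rankings (e.g. 0.03)
    """
    ranking = round(len(volumes) * share_in_top10)
    average_volume = sum(volumes) / len(volumes)
    return ranking * average_volume * ctr


# Placeholder cluster: 350 keywords at 1,000 searches/month each.
visits = estimate_cluster_traffic([1000] * 350, share_in_top10=0.10, ctr=0.03)
print(round(visits))  # 1050
```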

The main point is, treat SEO decisions like business moves rather than guessing games.

Crunch the numbers to make data-backed choices.

Content Plan

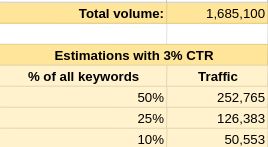

Having a list of keywords is not enough; you also need to figure out how to use them strategically in your content.

We do that by taking all the juicy keyword insights we uncovered and working them into a content plan.

Here’s an example of what one looks like for us:

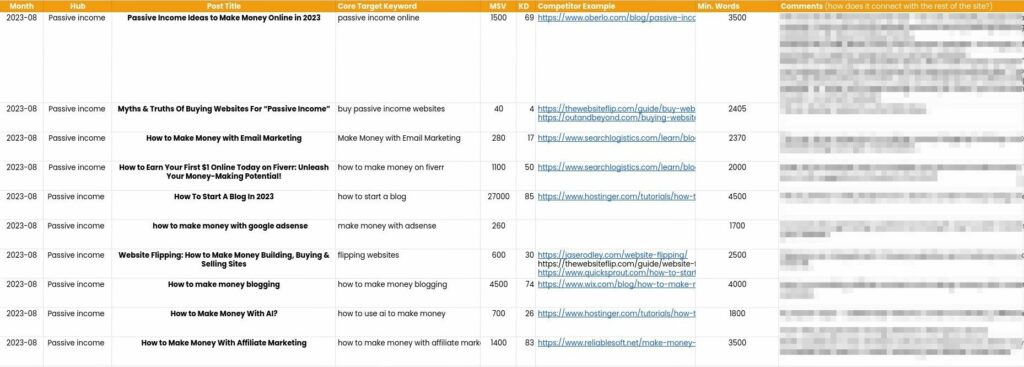

We used to use SurferSEO for content but recently switched over to NeuronWriter.

Neuron is fast, affordable, and just works. It has AI content built right in, like Surfer, but is way cheaper. It also handles more analysis on its own, with barely a learning curve.

The tool packs in just about everything you need, so in our opinion it’s an all-round winner.

You can also get a Free Trial to NeuronWriter here.

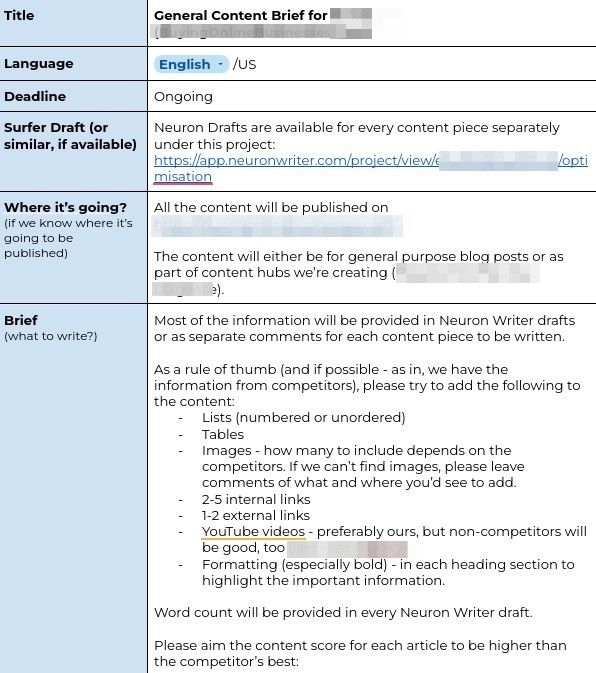

Once you’ve planned all the content, you should also create a content brief.

We do that for every project.

Depending on how big the content plan is, the brief covers either individual posts, a cluster, or the whole project.

You can grab a copy of our content brief template here.

And this is how it looks when filled in – try to provide as much useful information as possible:

It’s also a good idea to have a general content brief for the project, plus more specific stuff directly in the Neuron drafts.

Starting in February, we were publishing 10 new posts per month, squeezing in those optimized keywords wherever we could.

Link Building

Remember how I mentioned basing SEO on data like other business moves?

We take the same approach with link building.

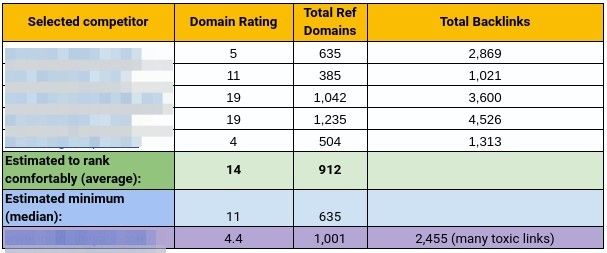

Each of our projects gets a Link Requirement Plan where we stack the site up against similar competitors.

Here’s what a summary of this document looks like:

This helps us estimate how many monthly links we’ll need to catch up and overtake the competition.

Looking at these numbers plus our low-hanging keywords and disavow file, we came up with 3 main link building goals:

- Sniper – Carefully target those low hangers.

- Shotgun – Rapidly catch up to competitors and boost other keywords.

- High DR – Pump up overall authority and trust.

We figured we’d need around 5-10 new links per month and kicked off the campaign in April.
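The core of that estimate is a gap calculation. A minimal Python sketch under simple assumptions (not our full Link Requirement Plan): compare your referring domains, excluding anything you’ve disavowed, with the competitor average, add the links competitors are likely to gain in the meantime, and spread the gap over your timeline. `links_per_month` and all inputs are hypothetical.

```python
import math


def links_per_month(our_referring_domains, competitor_referring_domains, months,
                    competitor_monthly_growth=0):
    """Links per month needed to reach the competitor average within `months`.

    competitor_monthly_growth: referring domains competitors gain per month (assumed).
    """
    average = sum(competitor_referring_domains) / len(competitor_referring_domains)
    target = average + competitor_monthly_growth * months
    gap = max(0, target - our_referring_domains)
    return math.ceil(gap / months)


# Hypothetical inputs: 50 clean referring domains vs. three competitors.
print(links_per_month(50, [100, 140, 120], months=12, competitor_monthly_growth=2))  # 8
```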

If you’re interested in a link campaign, subscribe to our outreach services here.

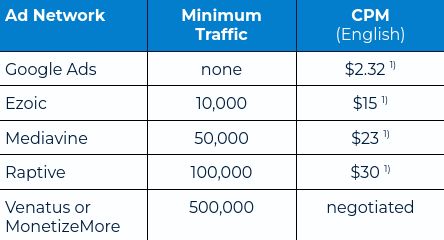

Additional Monetization

The site was making money only with display ads.

Don’t get me wrong; it’s a great source of income. (Calculate your potential ad revenue here.)

You can also optimize it: as soon as your site qualifies, switch to a better-paying display network.

Here’s a breakdown of requirements and potential CPMs on the most popular display networks, according to our research:

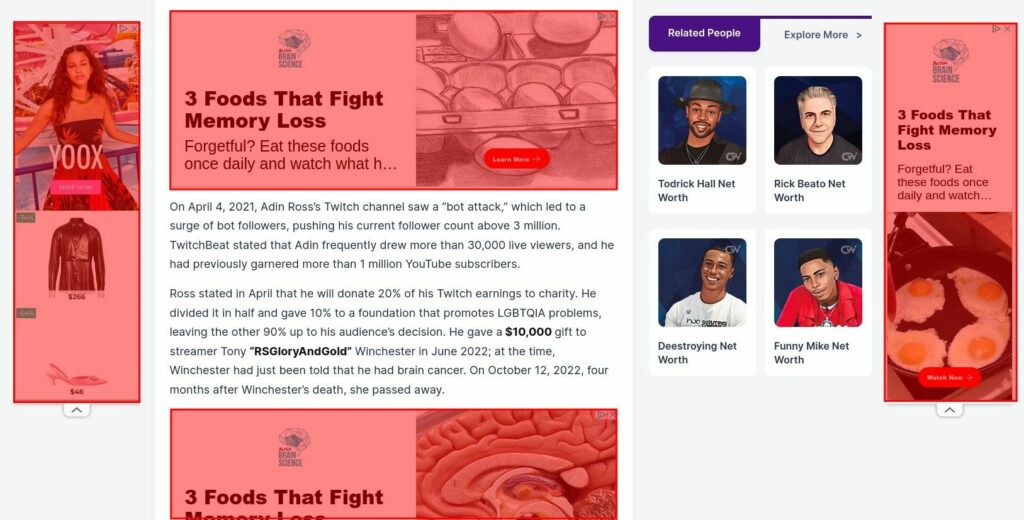

But you can only push display ads so far before it’s unhealthy, like this (ads highlighted in red):

Or like this:

Or even this:

Too many ads can actually get you penalized.

And Ezoic even points out that you can earn more ad revenue with fewer ads.

So what else can be done besides ad optimization? Add more monetization sources!

One of the most popular (and easiest) options is affiliate marketing with Amazon. Find out how much you can earn with Amazon.

Since you’re pumping out useful, informational content, include contextual affiliate links. I see it as improving the user experience!

If someone’s reading about grooming cats 🐈, they may want to buy the shampoo or brush you mention.

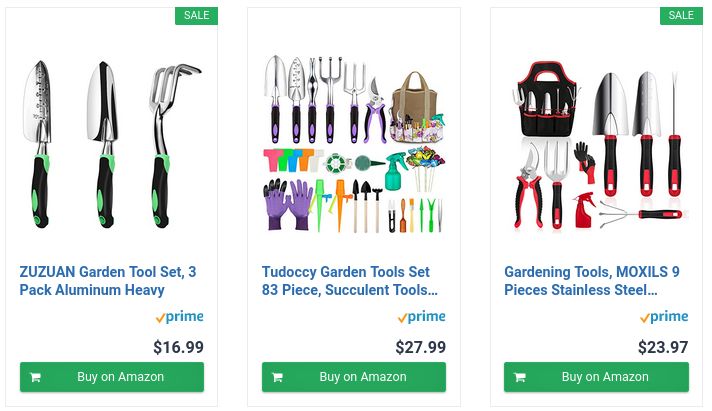

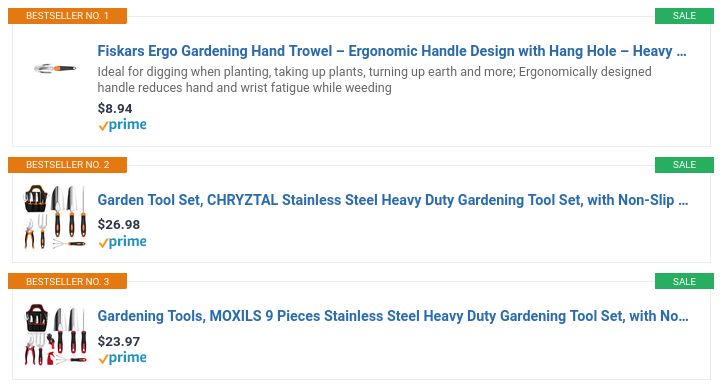

Our favorite tool for Amazon affiliate links is the AAWP plugin for WordPress. It lets you insert a simple shortcode to display a neat Amazon product box like this:

Alternative design:

Other monetization ideas include:

- Creating a newsletter.

- Direct ad sales.

- Lead generation.

- Upsells/cross-sells.

- Dropshipping.

- YouTube channel.

The more income streams, the more secure you are.

As the tired saying goes – don’t put all your eggs in one basket! 👈️

Spanish Version for Extra Traffic

Disclaimer! Since the new language version was our proof-of-concept project, we did not charge the client anything for the additional work in this area!

In April, we had strong signals that the recovery was well underway.

We had a growth plan in place, but I was looking for more.

When you’re dealing with a well-performing content website, expanding to other markets is a no-brainer.

There are only a few things to do:

- Have an ad system for monetization.

- Install a language handling plugin.

- Translate all your content.

- Rank and bank.

We’ve been talking internally about translating content websites and adding other languages, but it was this project (and the client) that gave us the opportunity to run with it as a proof-of-concept.

The process was simple:

- Download all the content from the site.

- Translate it using an API.

- Upload the translations back to the site.

- Connect every post in English with its respective Spanish translation.

Let’s see how I did it, shall we?

But first… ¡Hola!

Why Spanish?

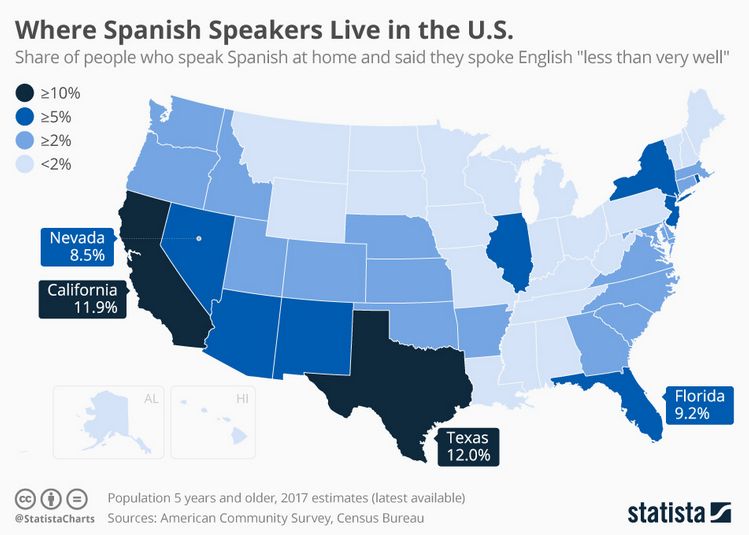

The site’s main target audience is in the USA, where over 41 million people aged five or older speak Spanish at home.

Add the almost 500 million people who speak Spanish globally, and Spanish looked like the best choice.

Not only were we going after the Spanish-speaking audience in the US, but we were also opening ourselves up to just shy of half a billion people worldwide.

The potential seemed hot 🔥.

Technical Stuff

Let me run you through a few technical details first.

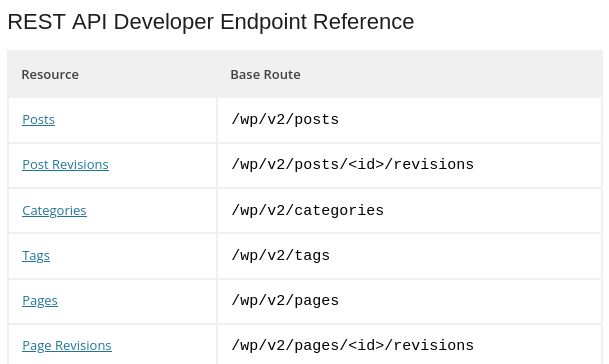

WordPress REST API

I (Rad) am a systems architect and developer, which is why I don’t particularly like WordPress. Don’t get me wrong, WordPress is an amazing system, and I don’t hate it; I just don’t appreciate some of the engineering decisions made.

It all boils down to the complexity introduced when a system is designed to run a blog, an e-commerce site, an LMS, an ERP, or pretty much anything else all at once.

So, I would definitely not call myself a WordPress developer, even though I know PHP (the underlying language) better than my mother tongue.

When I came up with the idea of downloading all the content from the site, I didn’t want to do it with a VA or an import/export plugin (although you can!).

I knew WordPress had a good REST API, but I didn’t know it well.

So how could I hack it without going through all the documentation?

The simple and very popular answer to that question was: ChatGPT.

I fed it some of the starter code I quickly created, asked it to use PHP 8 and Guzzle, and it became my best technical assistant!
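Rad’s version used PHP 8 and Guzzle. For readers who prefer Python, here’s a minimal sketch of the same idea using only the standard library (not Rad’s code): page through the core `/wp-json/wp/v2/posts` endpoint, which reports the page count in the `X-WP-TotalPages` header, and prepare a request that creates a post, authenticated with a WordPress Application Password. The function names and the draft status are assumptions. Note that the default `view` context returns rendered HTML in `content.rendered`; add `context=edit` with authentication if you need the raw post content.

```python
import base64
import json
import urllib.request
from urllib.parse import urlencode


def http_get_json(url):
    """GET a URL; return (parsed JSON body, response headers)."""
    with urllib.request.urlopen(url) as response:
        return json.load(response), response.headers


def fetch_all_posts(site, get_json=http_get_json, per_page=100):
    """Collect every post from /wp-json/wp/v2/posts, one page at a time."""
    posts, page = [], 1
    while True:
        query = urlencode({"per_page": per_page, "page": page})  # per_page max is 100
        batch, headers = get_json(f"{site}/wp-json/wp/v2/posts?{query}")
        posts.extend(batch)
        # WordPress reports the total number of pages in this response header.
        if page >= int(headers.get("X-WP-TotalPages", 1)):
            return posts
        page += 1


def build_create_request(site, user, app_password, title, content_html, status="draft"):
    """Prepare (but don't send) a POST that creates a post via the REST API."""
    token = base64.b64encode(f"{user}:{app_password}".encode()).decode()
    body = json.dumps({"title": title, "content": content_html, "status": status})
    return urllib.request.Request(
        f"{site}/wp-json/wp/v2/posts",
        data=body.encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
    )


# Usage (example.com and the credentials are placeholders):
# posts = fetch_all_posts("https://example.com")
# request = build_create_request("https://example.com", "editor", "xxxx xxxx xxxx xxxx",
#                                "Título", "<p>Contenido</p>")
# urllib.request.urlopen(request)
```

Injecting `get_json` keeps the pagination logic testable without a live site.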

Translation API

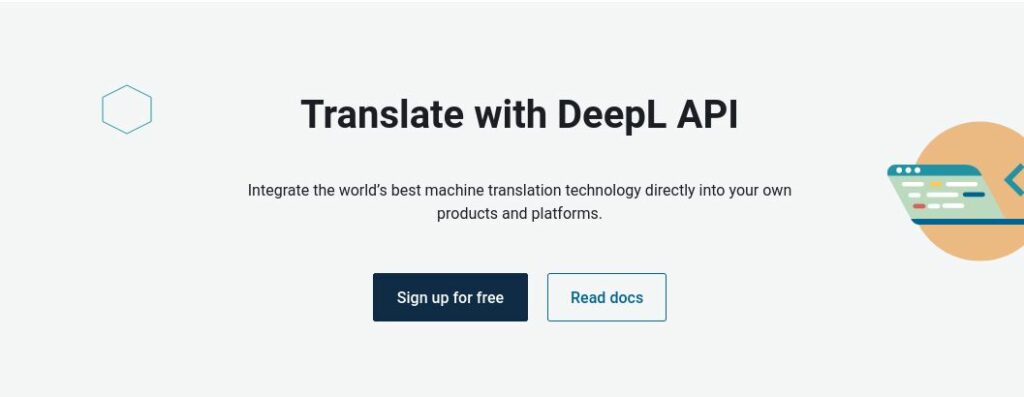

Before you point this out, I know we could’ve just used WPML and its translation service. But it wouldn’t be scalable or automated, and I don’t like overpaying 🤑.

I decided to go with DeepL, which has a very good translation API and is able to handle HTML.

What’s up with HTML? I’ve created a number of HTML and Regex parsers in my time, so I really didn’t want to faff around handling HTML syntax again. In my research, DeepL was just the best, and I stuck with it.
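For illustration, here’s a minimal Python sketch of a DeepL call (not Rad’s PHP code). It sends HTML with `tag_handling` set to `html`, so DeepL translates the text and leaves the markup alone. The endpoint, the `DeepL-Auth-Key` header, and the parameter names follow DeepL’s v2 API; the helper names are hypothetical, and very long posts may need splitting to stay within DeepL’s request size limits.

```python
import json
import urllib.request


def deepl_endpoint(auth_key):
    """Free-plan keys end in ":fx" and use a separate host."""
    host = "api-free.deepl.com" if auth_key.endswith(":fx") else "api.deepl.com"
    return f"https://{host}/v2/translate"


def build_translate_request(html_chunks, auth_key, target_lang="ES", source_lang="EN"):
    """Prepare a POST asking DeepL to translate a list of HTML strings."""
    body = {
        "text": html_chunks,
        "source_lang": source_lang,
        "target_lang": target_lang,
        "tag_handling": "html",  # translate text nodes, keep the markup intact
    }
    return urllib.request.Request(
        deepl_endpoint(auth_key),
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"DeepL-Auth-Key {auth_key}",
                 "Content-Type": "application/json"},
    )


def translate_html(html_chunks, auth_key, target_lang="ES"):
    """Send the request and return translated strings in the original order."""
    request = build_translate_request(html_chunks, auth_key, target_lang)
    with urllib.request.urlopen(request) as response:
        return [item["text"] for item in json.load(response)["translations"]]
```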

By the way, I still used WPML as the translation plugin, but only for some basic things I’d rather not deal with myself:

- Hreflangs.

- Language switcher.

- Linking EN and ES posts together as language alternatives.

Basically, WPML was covering the WordPress side of things.
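WPML outputs the hreflang annotations for you, but it helps to know what they should look like: every language version lists all alternates, including itself, plus an `x-default`. A minimal Python sketch; `hreflang_links` is a hypothetical helper, and the `/es/` URL structure is an assumption.

```python
from html import escape


def hreflang_links(alternates, default_lang="en"):
    """Build <link rel="alternate"> tags for every language version of a page.

    alternates: {language_code: absolute_url}; the same set goes on every version.
    """
    tags = [
        f'<link rel="alternate" hreflang="{lang}" href="{escape(url)}" />'
        for lang, url in sorted(alternates.items())
    ]
    # x-default tells Google which version to show when no language matches.
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(alternates[default_lang])}" />'
    )
    return "\n".join(tags)


print(hreflang_links({
    "en": "https://example.com/composting-guide/",
    "es": "https://example.com/es/composting-guide/",
}))
```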

Now, before I proceed, I know what you’re thinking:

Automated Translation? Is it OK for SEO?

Well, yes and no.

Yes, because it’s a good starting point when you only have one language version and want to have more.

No, because machine translation is always worse than human translation.

But, we took this into consideration – follow along to the section What’s Next for The Spanish Version and you’ll find out how we played it!

PHP Framework?

None. Unfortunately 😒

Instead of using Laravel (arguably the world’s favorite PHP framework), I created everything from scratch. That’s the one thing I would’ve changed in this project.

In the beginning, it was meant to be a proof-of-concept project, so I didn’t pay attention to the tools.

While in a creative frenzy (hey, I call myself a Technical SEO Artist for a reason!), I wasn’t even thinking about it; hours flew past, and I just kept coding, communicating with ChatGPT, creating more, testing as I went, and tweaking the code.

When I realized I was dealing with things that a framework would take care of, I was in way too deep and wanted to finish the project as is.

Not to say that my code was total garbage! I was following most of the good practices (KISS, DRY, and SOLID).

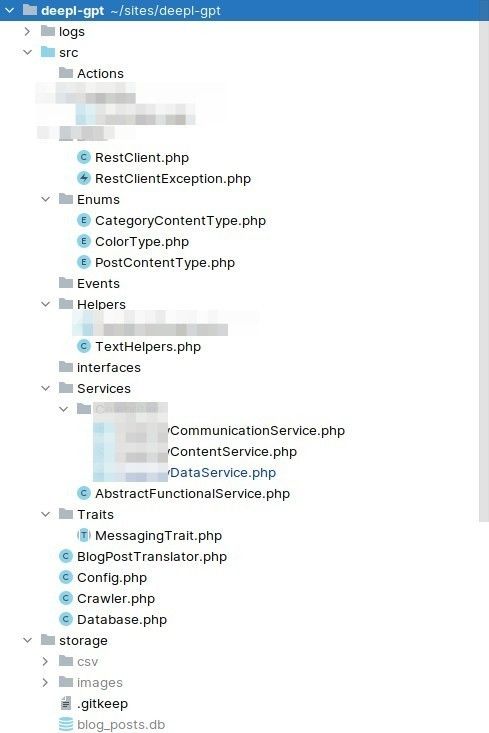

Here’s a screenshot of my project files, so you can spot a few of them:

It was fun, so I don’t regret anything!

Did it work?

Like a charm!

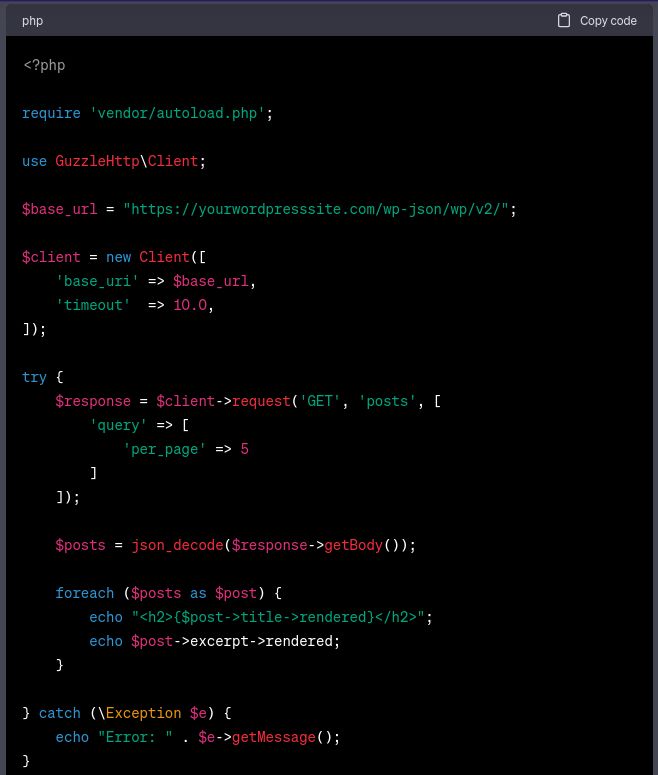

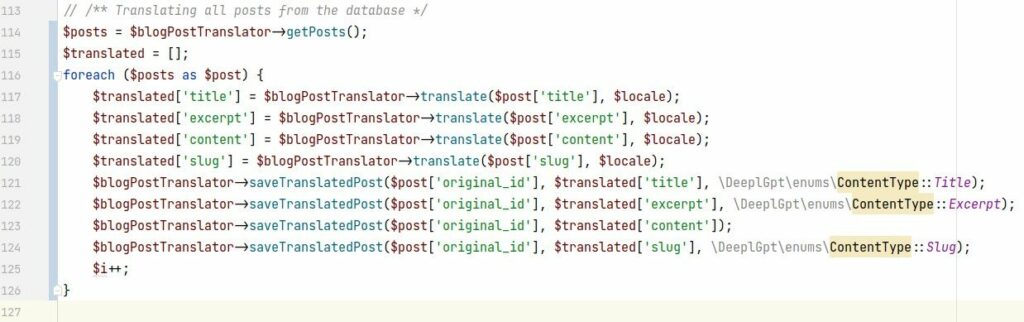

Here are a few code snippets: first, where the translations were happening:

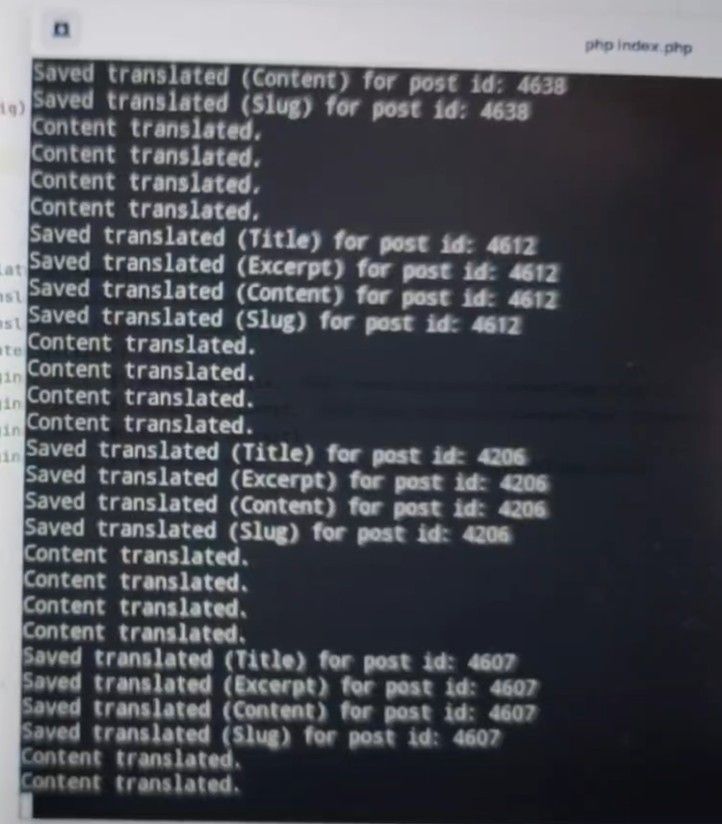

I was so excited about how well it worked that I forgot I could take a screenshot. Instead, I took a photo of the output:

And here is the code to upload the translated content to WordPress:

After the upload, the English posts were linked with the Spanish ones:

Here’s a screenshot of the post stats on WordPress:

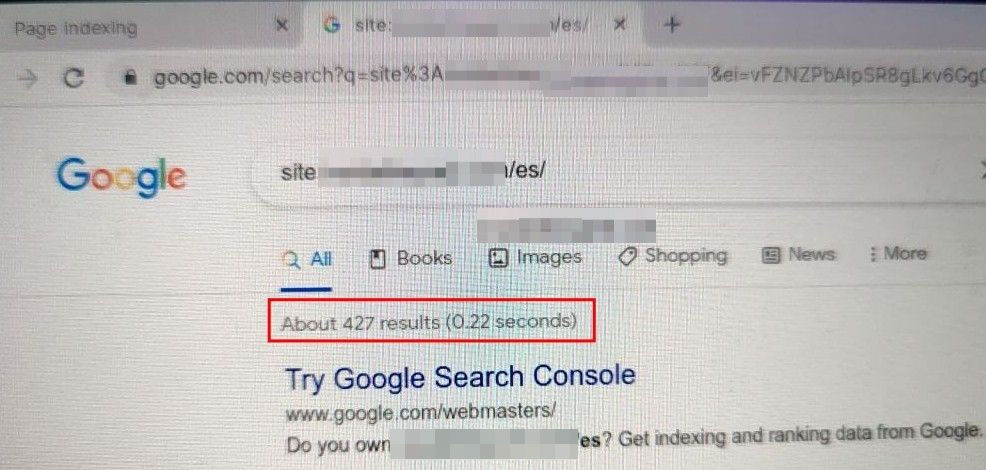

We pushed these changes live on the 27th of April, and in just over four days, Google indexed most of this new content!

(again, a photo of my screen instead of a screenshot)

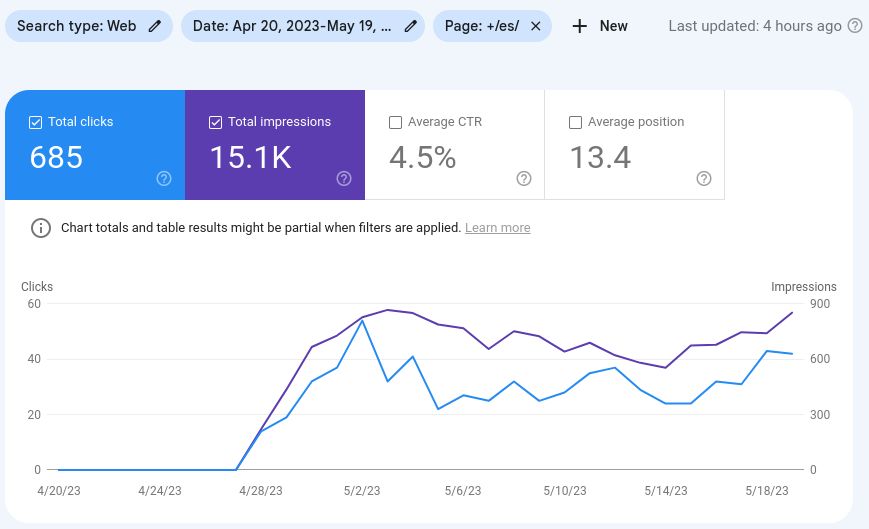

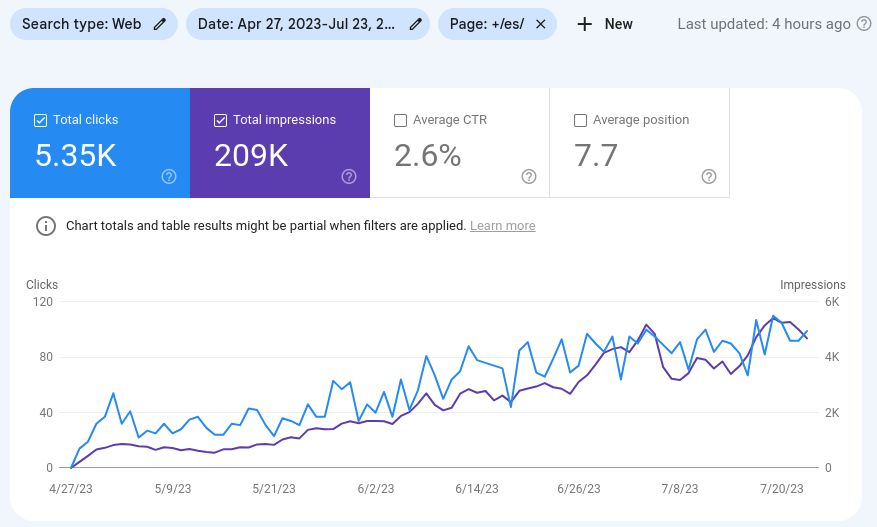

You can also see that Spanish pages started gaining impressions and clicks within a week!

Since then, the Spanish version has generated over 5K clicks and 209K impressions.

This alone gave the client an estimated $200 in extra revenue. Not bad!

Internal Linking

One thing I forgot about when translating the content was internal links.

The problem was that Spanish pages were linking internally only to English pages.

So after all the content was uploaded, I had to run a script to find the English links in the Spanish content and replace them with the correct Spanish URLs. Regex, yuck!

It was painful to run automatically, but it was needed. So I made it work 😉
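The script itself isn't shown here, so below is a minimal sketch of the same idea, assuming you already have a dictionary mapping each English URL to its Spanish counterpart (the `en_to_es` mapping, the function name, and the example URLs are hypothetical). It rewrites quoted `href` values, ignores trailing slashes when matching, and keeps `#fragments`. URLs with query strings won't match, and a real HTML parser would be more robust than a regex.

```python
import re

# Matches href="..." or href='...' and captures the quote style and the URL.
HREF_RE = re.compile(r"""href=(["'])(.*?)\1""")


def relink(html, en_to_es):
    """Point links to English posts at the matching Spanish posts."""
    # Ignore trailing slashes so /lawn-care and /lawn-care/ count as the same post.
    lookup = {en.rstrip("/"): es for en, es in en_to_es.items()}

    def swap(match):
        quote, url = match.group(1), match.group(2)
        base, sep, fragment = url.partition("#")
        target = lookup.get(base.rstrip("/"))
        if target is None:
            return match.group(0)  # not a known English post: leave it alone
        return f"href={quote}{target}{sep}{fragment}{quote}"

    return HREF_RE.sub(swap, html)
```

Running it over every Spanish post's content and saving the result back (for example through the REST API) completes the fix; links to external sites and unmapped pages pass through unchanged.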

Lessons Learned

Here are a few lessons I learned during this proof-of-concept:

- If Google likes and knows the website, it will index new content very quickly.

- When doing something like this, use a framework (like Laravel). It helps.

- The outcomes were not as good as our predictions. Since April, the Spanish version of the site has accounted for around 3% of total traffic; we expected at least 10%.

- The translation cost around $150 USD, but that doesn't include the time it took me to build the system.

- The Spanish version generated around $200 USD in revenue. That's less than we expected, although CPMs were higher than we predicted.

What’s Next for The Spanish Version?

Google might have a few things against automatic translations. This is why, from the very beginning of this project, we knew we'd have to proofread the content.

Since the day everything went live, our Spanish-speaking content team members have been proofreading it.

We'll keep doing so, as we're seeing ranking improvements for content after it has been proofread.

There are now over 700 articles in Spanish on the website. We’re publishing and translating 10 every month. Considering that our proofreading speed is 50 articles a month, we’ll catch up with everything in approximately 12 months.
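The catch-up estimate is simple backlog arithmetic: each month the team clears 50 articles while 10 new ones arrive, so the backlog shrinks by 40 a month. A minimal sketch (the function name is hypothetical):

```python
def months_to_catch_up(backlog, proofread_per_month, new_per_month):
    """Months until proofreading clears a backlog that keeps growing."""
    net = proofread_per_month - new_per_month  # articles cleared per month, net
    if net <= 0:
        raise ValueError("proofreading must outpace new content to ever catch up")
    return backlog / net
```

With all 700 articles still unproofread, `months_to_catch_up(700, 50, 10)` gives 17.5 months; a roughly 12-month horizon corresponds to about 480 articles still waiting, so the estimate presumably counts the articles already proofread since launch (that number isn't given here).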

Another thing this version of the site needs is link building. We’ve already started building some Spanish links.

Lastly, the plan is to do the same with other languages. We’re planning to expand to Portuguese, French and German.

The good news is, we already built the system and processes for it, so we can have a fully translated version of a site in a new language in just a few hours.

Results

Summary of results:

- Penalty recovery.

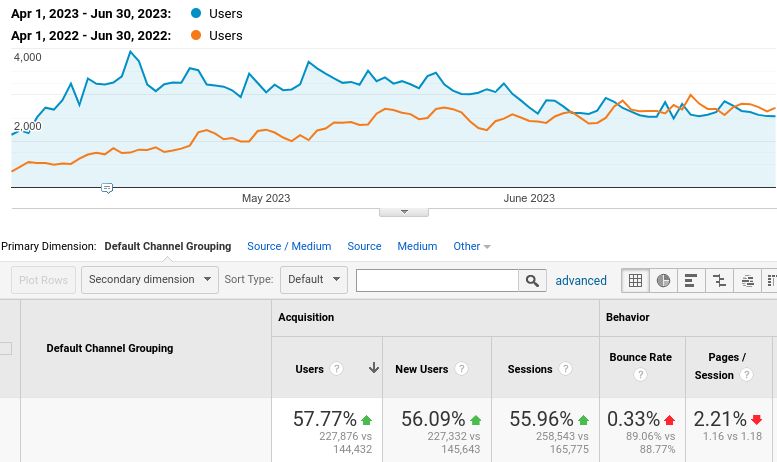

- Year-over-Year (Apr-Jun)
  - Sessions up by 55.96% (+92,768)
  - New Users up by 56.09% (+81,689)
  - Users up by 57.77% (+83,444)

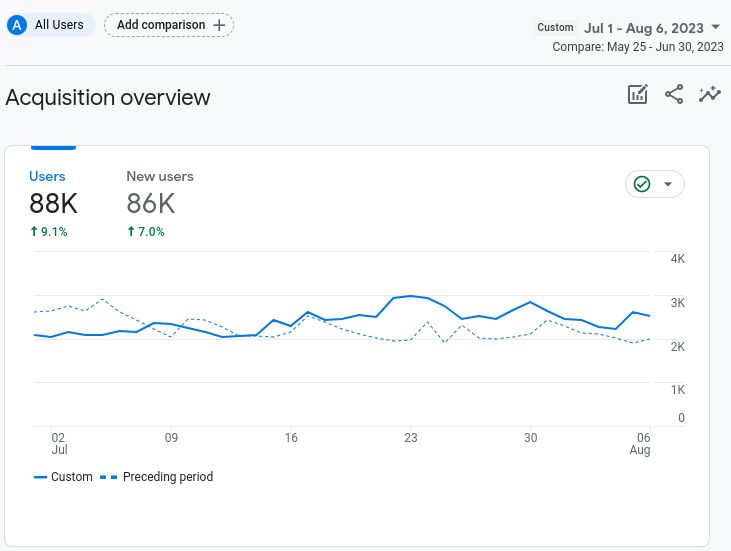

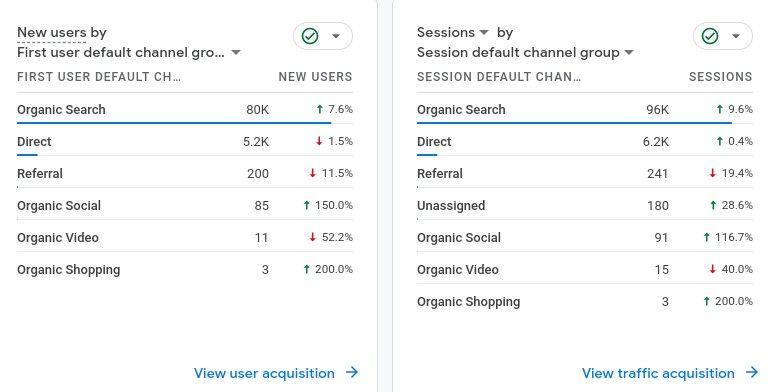

- Organic traffic, July 1 to August 6 compared to the preceding period (tracked in the new GA4)
  - Sessions up by 9.6%
  - New users up by 7.6%

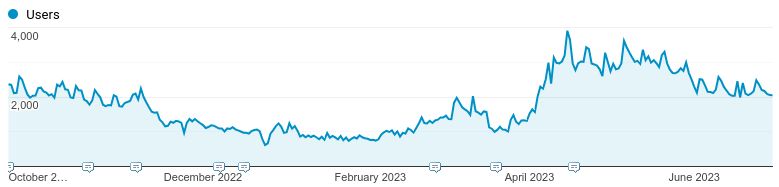

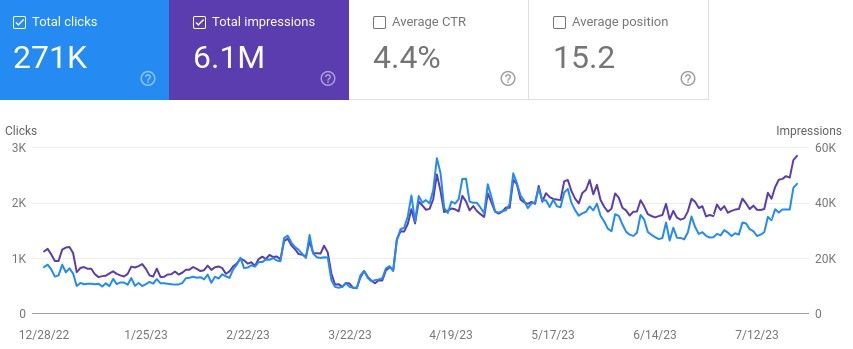

Foremost, we managed to recover the traffic and lift the penalty (devaluation):

Yes, we had a few hiccups along the way: from mid-March to mid-April, and again in June.

They're easiest to see when you look at the whole period we worked on the site. The "bucket" in March-April is very pronounced.

It’s not always smooth sailing; SEO is full of surprises!

Importantly, the site still prevailed in the end and traffic kept growing.

When we look at YoY, the site grew by over 55%:

Since we installed GA4 late (we had other priorities, like the penalty, OK?), I can only make a small comparison between July 1st to August 6th and the preceding period, May 25th to June 30th.

That preceding period includes the turbulence we went through in June.

Yet, the site still grew by almost 10% (sessions) and 8% (new users):

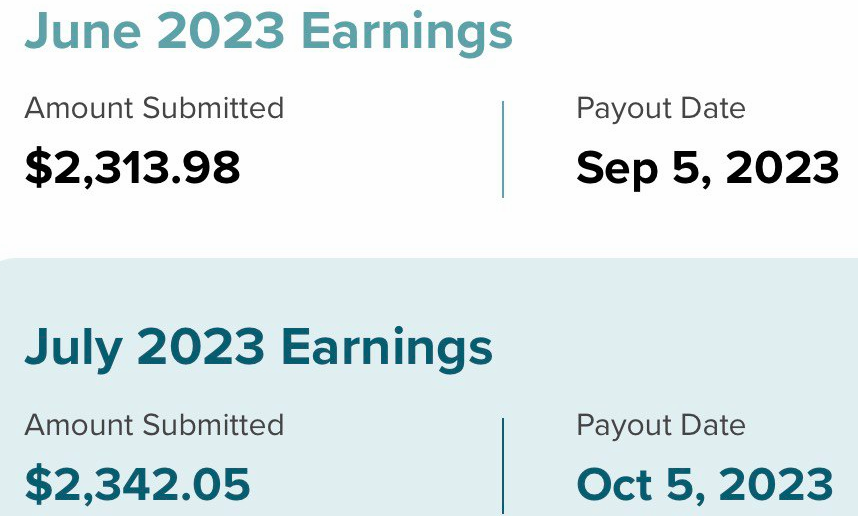
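The two GA4 windows above are the same length: July 1 to August 6 and May 25 to June 30 are 37 days each, counting both ends. If you want to reproduce this kind of period-over-period comparison yourself, here is a minimal sketch of computing the preceding window of equal length (the function name is hypothetical):

```python
from datetime import date, timedelta


def preceding_period(start, end):
    """Return the equal-length window ending the day before `start`.

    Both windows are inclusive of their first and last day.
    """
    length = (end - start).days + 1
    prev_end = start - timedelta(days=1)
    prev_start = prev_end - timedelta(days=length - 1)
    return prev_start, prev_end
```

For example, `preceding_period(date(2023, 7, 1), date(2023, 8, 6))` returns May 25 to June 30. The year 2023 is only a placeholder, since the year isn't stated here; it doesn't affect this range because the range doesn't include February.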

And here are the financial results everyone was waiting for:

- December – $893

- January – $669

- February – $964 (our work started kicking in)

- March – $1,506

- April – $2,858

- May – $3,162 (373% increase)

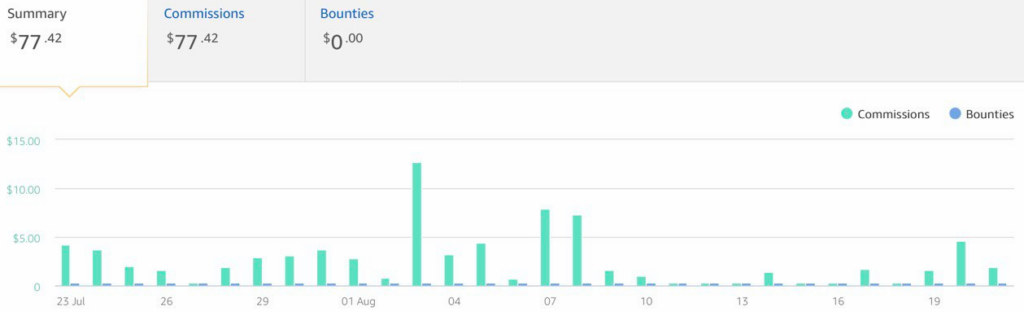

- June – $2,313

- July – $2,342 (display) + $77 (affiliate) = $2,419 (262% increase)
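Both percentages are measured against January ($669), the lowest month, not December. A short sketch that recomputes them from the monthly figures listed above:

```python
def percent_increase(baseline, value):
    """Percentage change from `baseline` to `value`."""
    return (value - baseline) / baseline * 100


# Monthly earnings in USD, as listed above (July = display + affiliate).
earnings = {
    "December": 893,
    "January": 669,
    "February": 964,
    "March": 1506,
    "April": 2858,
    "May": 3162,
    "June": 2313,
    "July": 2342 + 77,
}

baseline = earnings["January"]
for month, amount in earnings.items():
    change = percent_increase(baseline, amount)
    print(f"{month}: ${amount:,} ({change:+.0f}% vs. January)")
```

May works out to (3,162 − 669) / 669 ≈ 372.6%, which rounds to the 373% quoted, and July's $2,419 to about 262%.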

The lower revenue in June is due to the turbulence, while in July it's probably down to CTR or commission rate changes. We hit an all-time high (ATH) in traffic in July, so the client was just as surprised by the lower earnings.

That’s another reason why you should always look for additional ways to monetize your website.

Here are screenshots from the ad network earnings:

And from Amazon Associates:

Wrapping it up

When we took this project on, the client was stressed about his traffic and finances being hit by the core update. The situation looked very bad, and there seemed to be no end to the downward trend.

With our help (at times creative, at times grasping at straws, and at times doing the most mundane legwork), his site recovered and traffic reached its highest levels ever.

The client now has an independently run website with no penalty, a new Spanish version, an upward trajectory, and higher earnings than ever.

For me, it’s a total success! What do you think?

I also hope you learned some things along the way – let me know!
